“Researchers from the University of Technology Sydney (UTS) have developed biosensor technology that will allow you to operate devices, such as robots and machines, solely through thought-control.” (UTS, 20 March 2023) UTS reported this on its website on 20 March 2023. The brain-machine interface was developed by Chin-Teng Lin and Francesca Iacopi (UTS Faculty of Engineering and IT) in collaboration with the Australian Army and the Defence Innovation Hub. “The user wears a head-mounted augmented reality lens which displays white flickering squares. By concentrating on a particular square, the brainwaves of the operator are picked up by the biosensor, and a decoder translates the signal into commands.” (UTS, 20 March 2023) According to the website, the technology was demonstrated by the Australian Army, with selected soldiers operating a quadruped robot through the brain-machine interface. “The device allowed hands-free command of the robotic dog with up to 94% accuracy.” (UTS, 20 March 2023) The paper “Noninvasive Sensors for Brain–Machine Interfaces Based on Micropatterned Epitaxial Graphene” can be accessed at pubs.acs.org/doi/10.1021/acsanm.2c05546.

An Investigation of Robotic Hugs

From March 27 to 29, 2023, the AAAI 2023 Spring Symposia will feature the symposium “Socially Responsible AI for Well-being”, organized by Takashi Kido (Teikyo University, Japan) and Keiki Takadama (The University of Electro-Communications, Japan). The symposia are usually held at Stanford University; for staffing reasons, this year they will take place at the Hyatt Regency in San Francisco. On March 28, Prof. Dr. Oliver Bendel will present the paper “Increasing Well-being through Robotic Hugs”, written by himself, Andrea Puljic, Robin Heiz, Furkan Tömen, and Ivan De Paola. From the abstract: “This paper addresses the question of how to increase the acceptability of a robot hug and whether such a hug contributes to well-being. It combines the lead author’s own research with pioneering research by Alexis E. Block and Katherine J. Kuchenbecker. First, the basics of this area are laid out with particular attention to the work of the two scientists. The authors then present HUGGIE Project I, which largely consisted of an online survey with nearly 300 participants, followed by HUGGIE Project II, which involved building a hugging robot and testing it on 136 people. At the end, the results are linked to current research by Block and Kuchenbecker, who have equipped their hugging robot with artificial intelligence to better respond to the needs of subjects.” More information is available via aaai.org/conference/spring-symposia/sss23/.

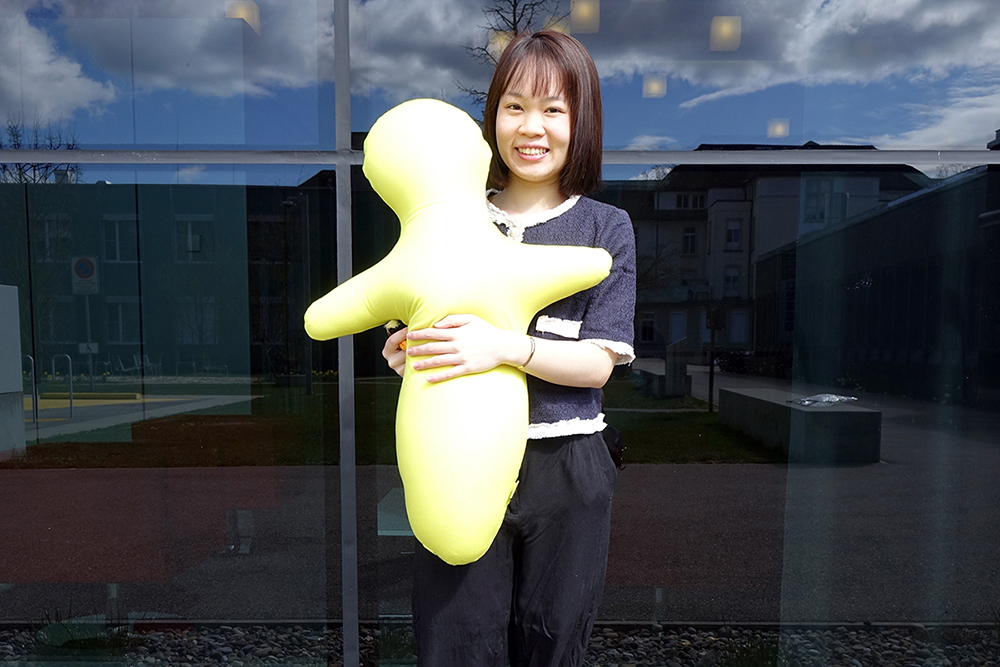

Hugged by a Robot

The HUGGIE project at the School of Business FHNW is moving forward. Under the supervision of Prof. Dr. Oliver Bendel, the team of four (Andrea Puljic, Robin Heiz, Ivan De Paola, Furkan Tömen) will test a hugging doll on users until January 2023. It is already known that users want the arms and body to be warm and soft. Now the team is investigating whether other factors, such as voice, vibration and smell, also increase acceptance. The key question of the practical project is: Can a social robot help increase physical and mental well-being through hugs and touches, and what factors should be taken into account? The basis of HUGGIE is a dressmaker’s dummy. Added to this are clothing, a warming element, a vibration element, and an audio system. In addition, a scent is applied. The arms are moved by invisible strings so that active hugs are possible. Overall, this creates the impression of a hugging robot. A trial run with 65 test subjects took place in a Swiss company in November 2022. They were hugged both by HUGGIE and by a giant teddy bear. Based on the results and impressions, the questionnaire and the test setup will be improved. From the end of November 2022, the project will enter its final phase, in which the tests will be carried out and evaluated.

A Robot Among Penguins

British filmmaker John Downer has created artificial monkeys, wolves, hippos, turtles, alligators and other animals to observe the corresponding wildlife and obtain spectacular images. His well-known robots are very intricately designed and resemble the animals they mimic in almost every detail. Such technically elaborate and artistically demanding means are not necessary for all species. In a recent article, USA Today reports on a robot called ECHO. “ECHO is a remote-controlled ground robot that silently spies on the emperor penguin colony in Atka Bay. The robot is being monitored by the Single Penguin Observation and Tracking observatory. Both the SPOT observatory, which is also remote-operated through a satellite link, and the ECHO robot capture photographs and videos of animal population in the Arctic.” (USA Today, May 6, 2022) Atka Bay is in fact in Antarctica, not the Arctic. ECHO does not resemble a penguin in any way. It is a yellow vehicle with four thick wheels. But as a video shows, the animals seem to have gotten used to it: it comes very close to them without scaring them. Wildlife monitoring with robots is becoming increasingly important, and very different types of robots are evidently being considered for it.

The Idea of a Tesla Bot

Elon Musk presented the idea of a humanoid robot that, according to Manager Magazin, could take on dangerous, repetitive or boring tasks in the future. The German magazine reports that the Tesla Bot will be about five feet eight inches (just under 1.73 meters) tall, weigh 57 kilograms, and be able to do numerous jobs, from putting screws on cars to picking up groceries at the store. It will be equipped with eight cameras and a full self-driving computer, and will use the same tools Tesla uses in its cars (Manager Magazin, 20 August 2021). According to the announcement, the robot will be able to take over physical work. But that is exactly what service robots, and humanoid models in particular, are currently struggling with. The visualization hardly allows any conclusions to be drawn about the capabilities of the prototype, which is supposed to be available as early as 2022. Eyes and mouths could appear on a large display in the head area, and facial expressions could be implemented in this way. When the display is switched off, as seen in the video, the robot appears creepy and unapproachable. In this form, the limbs seem hardly suited to carrying the body. The joints, too, are visible only in rudimentary form. Overall, it is unclear why Tesla, of all companies, should close the gaps that Sony, SoftBank and Boston Dynamics have still not closed even after many years.

A Four-legged Robocop

In New York City, police have taken a Boston Dynamics robot on a mission to an apartment building. Spot is an advanced four-legged model that many people find scary. The operation resulted in the arrest of an armed man. Apparently, the robot played no active role in this. Futurism magazine reports this in a recent article, which also points to the concerns such deployments raise. “The robodog may not have played an active role in the arrest, but having an armed police squadron deploy a robot to an active crime scene raises red flags about civil liberties and the future of policing.” (Futurism, 15 April 2021) Even Boston Dynamics robots are not so advanced that they can play a central role in police operations. They can, however, serve to intimidate. It is questionable whether the NYPD is doing itself any favors in this way. The robots’ reputation will certainly not benefit from this kind of use.

The New Life of Hugvie

How can you make social robots, i.e. robots for interacting with people and animals, out of simple, soft shapes and objects? Under the supervision of Prof. Dr. Oliver Bendel, Vietnamese students Nhi Hoang Yen Tran and Thang Vu Hoang are investigating this question in the project “Simple, Soft Social Robots” at the School of Business FHNW. They are using Hugvie from the Hiroshi Ishiguro Laboratories as a basis. These labs are particularly famous for the Geminoid and for Erica, but huggable robots like Telenoid also come from them. The latest product in this series is Hugvie. A pocket for a smartphone is attached to its head, so that people who are far away from each other can talk on the phone while hugging it and have the feeling of hugging and touching each other. But what else can you do with Hugvie and similar forms? Can you make them conversational partners themselves, and can you teach them to move their limbs and be active in other ways? And what does such robotization of simple, soft forms and objects mean for everyday life and society?

Little Sophia

Hanson Robotics is most famous for Sophia. Now the Hong Kong-based company is preparing to launch a new robot. According to the website, Little Sophia is the little sister of Sophia and the newest member of the Hanson Robotics family. “Little Sophia can walk, talk, sing, play games and, like her big sister, even tell jokes! She is a programmable, educational companion for kids, that will inspire children to learn about coding, AI, science, technology, engineering and math through a safe, interactive, human-robot experience. Unlike most educational toys designed by toy companies, Little Sophia is crafted by the same renowned developers, engineers, roboticists and AI scientists that created Sophia the Robot.” (Website Hanson Robotics) In photos, Little Sophia looks even creepier than Sophia, and the videos do not change this impression. The boy in the photo could be Little Sophia’s brother. What is special about her is her wide range of facial expressions. It must be emphasized that, as in the case of Sophia, this is not a virtual face but a physical one. From a technical point of view, this is without a doubt an interesting product.

Hyundai Now Creates Tigers

Hyundai Motor Group has revealed a robot named TIGER, which stands for Transforming Intelligent Ground Excursion Robot. According to the company, it is the second Ultimate Mobility Vehicle (UMV) and the first designed to be uncrewed. “TIGER’s exceptional capabilities are designed to function as a mobile scientific exploration platform in extreme, remote locations. Based on a modular platform architecture, its features include a sophisticated leg and wheel locomotion system, 360-degree directional control, and a range of sensors for remote observation. It is also intended to connect to unmanned aerial vehicles (UAVs), which can fully charge and deliver TIGER to inaccessible locations.” (Media Release, 10 February 2021) Hyundai has also released a video of the robot. With TIGER, the company has developed a very interesting proof of concept. The combination of legs and wheels in particular could prove to be the solution of the future.

Ariana Grande Bows to “Metropolis”

Ariana Grande walks in the footsteps of Fritz Lang with her video “34+35”. The director is famous for his 1927 science fiction film “Metropolis”, in which a robot is transformed into an artificial, human-looking woman, a copy of the real Maria (aka Mary). The video, which quotes this famous model, was directed by Director X. According to V Magazine, “34+35” is the second track on Grande’s recent album “Positions”, “but it is first in sexually charged metaphors” (V Magazine, 17 November 2020). From the beginning, the robot in the “campy video” has the pretty head of Ariana Grande. The point is to bring it to life. This happens in an apparatus reminiscent of the one in “Metropolis”. Of course, Ariana Grande is also the scientist who performs the experiment, so she corresponds to the mad inventor Rotwang. Some lines in the song suggest that the Ariana Grande robot is a sex robot. “Can you stay up all night?/Fuck me ’til the daylight/Thirty-four, thirty-five”, sings the star from Boca Raton. V Magazine writes: “Presumably, a robot could do such a thing, and that is perhaps what this mechanized lady has been designed for.” (V Magazine, 17 November 2020) The artificial Maria in “Metropolis” serves a different purpose: she is used as a deceptive robot. As such, she is more closely related to the research on robot deception by Ronald C. Arkin and Oliver Bendel.