A markup language is a machine-readable language for structuring and formatting texts and other data. The best known is the Hypertext Markup Language (HTML). Other well-known examples are SSML (for adapting synthetic voices) and AIML (for artificial intelligence applications). Markup languages describe the properties, affiliations and forms of representation of sections of a text or data set. This is usually done by marking the sections with tags; attributes and their values can also be important. A student paper at the School of Business FHNW will describe and compare known markup languages and examine whether there is room for further artifacts of this kind. A markup language suitable for marking up written and spoken language in moral terms, as well as for the morally adequate display of pictures, videos and animations and the playing of sounds, could be called MOML (Morality Markup Language). Is such a language possible and helpful? Can it be used for moral machines? The paper will deal with these questions as well. The supervisor of the project, which will last until the end of the year, is Prof. Dr. Oliver Bendel. Since 2012, he and his teams have created formulas and annotated decision trees for moral machines and a number of moral machines themselves, such as GOODBOT, LIEBOT, BESTBOT, and LADYBIRD.
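To make the roles of tags, attributes and values concrete, here is a minimal sketch in Python. The MOML fragment is purely hypothetical: no such language has been specified, and the element names (`utterance`, `image`) and attribute names (`honesty`, `politeness`, `display`) are assumptions invented for illustration only.

```python
import xml.etree.ElementTree as ET

# Hypothetical MOML fragment. The element and attribute names are invented
# for illustration and do not come from any existing specification.
MOML_SAMPLE = """
<moml>
  <utterance honesty="strict" politeness="high">I cannot confirm that claim.</utterance>
  <image src="scene.png" display="blur"/>
</moml>
"""


def extract_annotations(markup):
    """Return one record per child element: its tag, attributes and text."""
    root = ET.fromstring(markup.strip())
    records = []
    for element in root:
        records.append({
            "tag": element.tag,
            "attributes": dict(element.attrib),
            "text": (element.text or "").strip(),
        })
    return records


records = extract_annotations(MOML_SAMPLE)
for record in records:
    print(record)
```

Each tag marks a section, and the attributes and their values say how a machine should treat it. Whether a real MOML would look anything like this is exactly the kind of question the student paper would have to answer.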

The Future of Autonomous Driving

Driving in cities is a very complex matter. There are several reasons for this: You have to judge hundreds of objects and events at all times. You have to communicate with people. And you should be able to change decisions spontaneously, for example because you remember that you have to buy something. That’s a bad prospect for an autonomous car. Of course, it can use some tricks: It can drive very slowly. It can use virtual tracks or special lanes and signals and sounds. A bus or shuttle is able to use such tricks. But hardly a car. Autonomous individual transport in cities will only be possible if the cities are redesigned. This was done once before, a few decades ago. And it wasn’t a good idea at all. So don’t let autonomous cars drive in the cities, but let them drive on the highways. Should autonomous cars make moral decisions about the lives and deaths of pedestrians and cyclists? They had better not. Moral machines are a valuable innovation in certain contexts. But not in city traffic. Pedestrians and cyclists rarely get onto the highway. There are many reasons why we should allow autonomous cars only there.

Conversational Agents: Acting on the Wave of Research and Development

The papers of the CHI 2019 workshop “Conversational Agents: Acting on the Wave of Research and Development” (Glasgow, 5 May 2019) are now listed on convagents.org. The extended abstract by Oliver Bendel (School of Business FHNW) entitled “Chatbots as Moral and Immoral Machines” can be downloaded here. The workshop brought together experts from all over the world who are working on the basics of chatbots and voicebots and are implementing them in different ways. Companies such as Microsoft, Mozilla and Salesforce were also present. Approximately 40 extended abstracts were submitted. On 6 May, a bagpipe player opened the four-day conference following the 35 workshops. Dr. Aleks Krotoski, Pillowfort Productions, gave the first keynote. One of the paper sessions in the morning was dedicated to the topic “Values and Design”. All in all, both classical specific fields of applied ethics and the young discipline of machine ethics were represented at the conference. More information via chi2019.acm.org.

Ethical and Statistical Considerations in Models of Moral Judgments

Torty Sivill works at the Computer Science Department, University of Bristol. In August 2019 she published the article “Ethical and Statistical Considerations in Models of Moral Judgments”. “This work extends recent advancements in computational models of moral decision making by using mathematical and philosophical theory to suggest adaptations to state of the art. It demonstrates the importance of model assumptions and considers alternatives to the normal distribution when modeling ethical principles. We show how the ethical theories, utilitarianism and deontology can be embedded into informative prior distributions. We continue to expand the state of the art to consider ethical dilemmas beyond the Trolley Problem and show the adaptations needed to address this complexity. The adaptations made in this work are not solely intended to improve recent models but aim to raise awareness of the importance of interpreting results relative to assumptions made, either implicitly or explicitly, in model construction.” (Abstract) The article can be accessed via https://www.frontiersin.org/articles/10.3389/frobt.2019.00039/full.

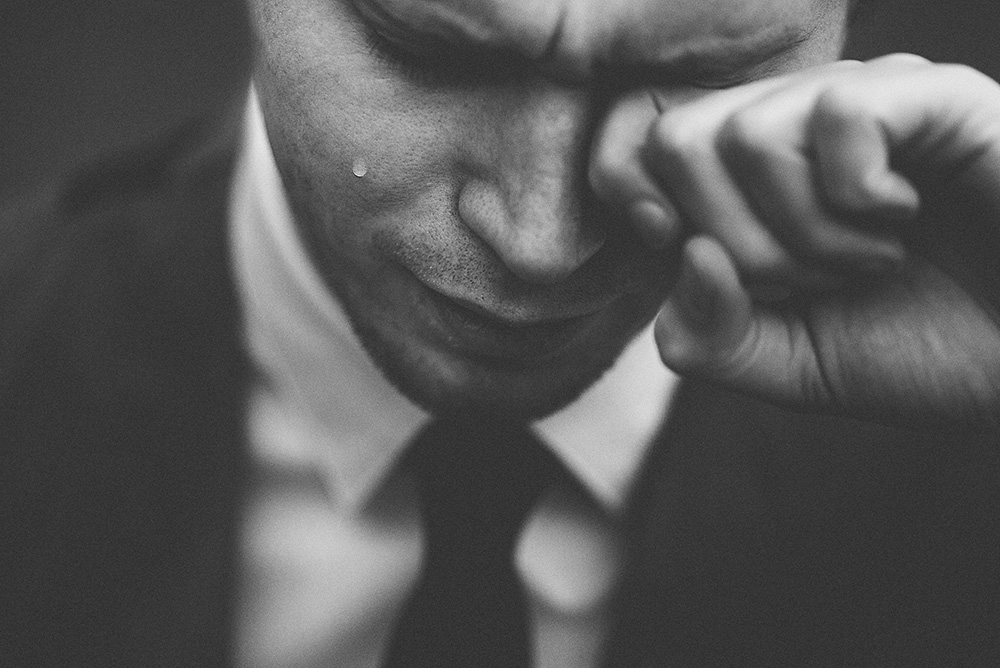

Robots, Empathy and Emotions

“Robots, Empathy and Emotions” – this research project was put out to tender some time ago. The contract was awarded to a consortium of FHNW, ZHAW and the University of St. Gallen. The applicant, Prof. Dr. Hartmut Schulze from the FHNW School of Applied Psychology, covers the field of psychology. The co-applicant Prof. Dr. Oliver Bendel from the FHNW School of Business takes the perspective of information, robot and machine ethics, and the co-applicant Prof. Dr. Maria Schubert from the ZHAW that of nursing science. The client TA-SWISS stated on its website: “What influence do robots … have on our society and on the people who interact with them? Are robots perhaps rather snitches than confidants? … What do we expect from these machines or what can we effectively expect from them? Numerous sociological, psychological, economic, philosophical and legal questions related to the present and future use and potential of robots are still open.” (Website TA-SWISS, own translation) The kick-off meeting with a high-calibre advisory group took place in Bern, the capital of Switzerland, on 26 June 2019.

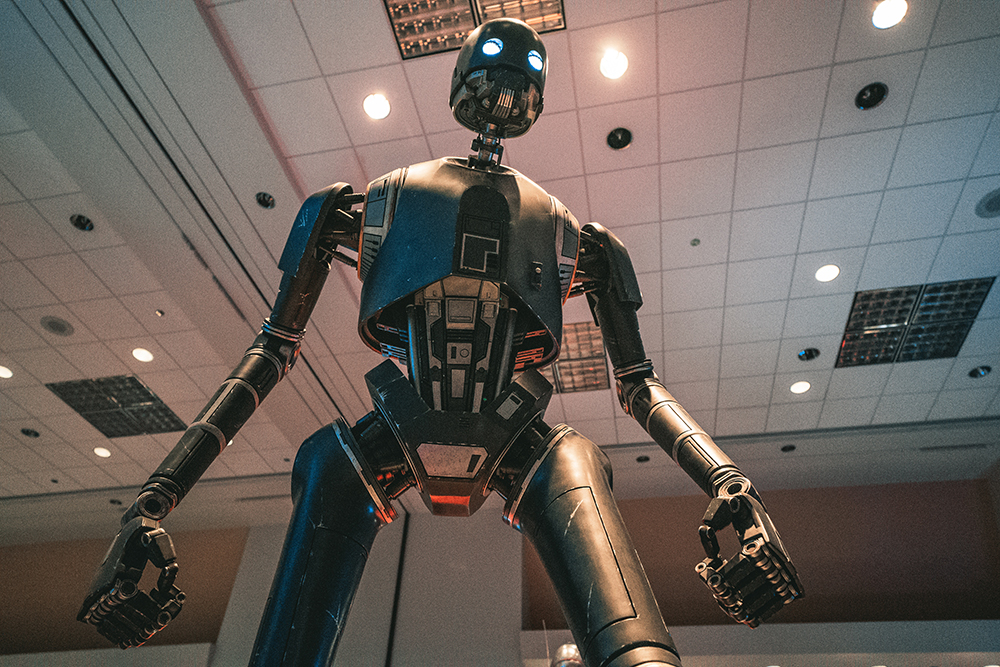

The Relationship between Artificial Intelligence and Machine Ethics

Artificial intelligence has human or animal intelligence as a reference and attempts to represent it in certain aspects. It can also try to deviate from human or animal intelligence, for example by solving problems differently with its systems. Machine ethics is dedicated to machine morality, producing it and investigating it. Whether one likes the concepts and methods of machine ethics or not, one must acknowledge that novel autonomous machines are emerging that appear more complete than earlier ones in a certain sense. It is almost surprising that artificial morality did not join artificial intelligence much earlier. In particular, machines that simulate human intelligence and human morality for manageable areas of application seem to be a good idea. But what if a superintelligence with a supermorality forms a new species superior to ours? That’s science fiction, of course. But it is also something that some scientists want to achieve. Basically, it’s important to clarify terms and explain their connections. This is done in a graphic that was published in July 2019 on informationsethik.net and is linked here.

Chatbots in Amsterdam

CONVERSATIONS 2019 is a full-day workshop on chatbot research. It will take place on November 19, 2019 at the University of Amsterdam. From the description: “Chatbots are conversational agents which allow the user access to information and services though natural language dialogue, through text or voice. … Research is crucial in helping realize the potential of chatbots as a means of help and support, information and entertainment, social interaction and relationships. The CONVERSATIONS workshop contributes to this endeavour by providing a cross-disciplinary arena for knowledge exchange by researchers with an interest in chatbots.” The topics of interest that may be explored in the papers and at the workshop include humanlike chatbots, networks of users and chatbots, trustworthy chatbot design and privacy and ethical issues in chatbot design and implementation. More information via conversations2019.wordpress.com/.

Implementing Responsible Research and Innovation for Care Robots

The article “Implementing Responsible Research and Innovation for Care Robots through BS 8611” by Bernd Carsten Stahl is part of the open access book “Pflegeroboter” (published in November 2018). From the abstract: “The concept of responsible research and innovation (RRI) has gained prominence in European research. It has been integrated into the EU’s Horizon 2020 research framework as well as a number of individual Member States’ research strategies. Elsewhere we have discussed how the idea of RRI can be applied to healthcare robots … and we have speculated what such an implementation might look like in social reality … In this paper I will explore how parallel developments reflect the reasoning in RRI. The focus of the paper will therefore be on the recently published standard on ‘Robots and robotic devices: Guide to the ethical design and application of robots and robotic systems’ … I will analyse the standard and discuss how it can be applied to care robots. The key question to be discussed is whether and to what degree this can be seen as an implementation of RRI in the area of care robotics.” By July 2019, the book and its individual chapters had been downloaded 80,000 times, which indicates a lively interest in the topic. More information via www.springer.com/de/book/9783658226978.

Development of a Morality Menu

Machine ethics produces moral and immoral machines. The morality is usually fixed, e.g. by programmed meta-rules and rules. The machine is thus capable of certain actions, but not others. Another approach, however, is the morality menu (MOME for short). With this, the owner or user transfers his or her own morality onto the machine. The machine then behaves, down to the details, as he or she would behave. Together with his teams, Prof. Dr. Oliver Bendel developed several artifacts of machine ethics at his university from 2013 to 2018. For one of them, he designed a morality menu that has not yet been implemented. Another concept exists for a virtual assistant that can make reservations and place orders for its owner more or less independently. In the article “The Morality Menu” the author introduces the idea of the morality menu in the context of two concrete machines. Then he discusses advantages and disadvantages and presents possibilities for improvement. A morality menu can be a valuable extension for certain moral machines. You can download the article here. In 2019, a morality menu for a robot will be developed at the School of Business FHNW.
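As a rough illustration of the idea, a morality menu for the virtual assistant mentioned above could be modelled as a set of owner-controlled switches. The following Python sketch is not taken from the article. The option names (`may_place_orders_unasked`, `discloses_being_a_machine`, `uses_formal_address`) and both helper functions are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class MoralityMenu:
    """Hypothetical owner-controlled switches; all names are illustrative."""
    may_place_orders_unasked: bool = False
    discloses_being_a_machine: bool = True
    uses_formal_address: bool = True


def opening_line(menu):
    """Build the assistant's first sentence according to the owner's settings."""
    greeting = "Good evening" if menu.uses_formal_address else "Hi"
    if menu.discloses_being_a_machine:
        return f"{greeting}, I am a virtual assistant calling on behalf of my owner."
    return f"{greeting}, I would like to make a reservation."


def may_place_order(menu, owner_confirmed):
    """An order goes through if the owner confirmed it or allows unasked orders."""
    return owner_confirmed or menu.may_place_orders_unasked


default_menu = MoralityMenu()
print(opening_line(default_menu))
print(may_place_order(default_menu, owner_confirmed=False))
```

The point of the sketch is that the rules are not fixed by the developer: changing a switch changes what the machine will and will not do, which is where both the appeal and the risks of the approach lie.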

Deceptive Machines

“AI has definitively beaten humans at another of our favorite games. A poker bot, designed by researchers from Facebook’s AI lab and Carnegie Mellon University, has bested some of the world’s top players …” (The Verge, 11 July 2019) According to the magazine, Pluribus was remarkably good at bluffing its opponents. The Wall Street Journal reported: “A new artificial intelligence program is so advanced at a key human skill – deception – that it wiped out five human poker players with one lousy hand.” (Wall Street Journal, 11 July 2019) Of course, you don’t have to equate bluffing with cheating, but interesting scientific questions arise in this context. At the conference “Machine Ethics and Machine Law” in Krakow in 2016, Ronald C. Arkin, Oliver Bendel, Jaap Hage, and Mojca Plesnicar discussed the following question on a panel: “Should we develop robots that deceive?” Ron Arkin (who is in military research) and Oliver Bendel (who is not) came to the conclusion that we should, but they had very different arguments. The ethicist from Zurich, inventor of the LIEBOT, advocates free, independent research in which problematic and deceptive machines are also developed for the sake of an important gain in knowledge, but is committed to regulating the areas of application (for example dating portals or military operations). Further information about Pluribus can be found in the paper itself, entitled “Superhuman AI for multiplayer poker”.