The “Handbuch Maschinenethik” (ed. Oliver Bendel) was published by Springer VS over a year ago. It brings together contributions from leading experts in the fields of machine ethics, robot ethics, technology ethics, philosophy of technology and robot law. It has become a comprehensive, exemplary and unique book. In a way, it forms a counterpart to the American research that dominates the discipline: Most of the authors (among them Julian Nida-Rümelin, Catrin Misselhorn, Eric Hilgendorf, Monika Simmler, Armin Grunwald, Matthias Scheutz, Janina Loh and Luís Moniz Pereira) come from Europe and Asia. The authors had been working on the project since 2017 and submitted their contributions continuously until the book went to print. The editor, who has been working on information, robot and machine ethics for 20 years and has been doing intensive research on machine ethics for nine years, is pleased to report that 53,000 downloads have already been recorded, which is quite a lot for a highly specialized book. The first article for a second edition is also available, namely “The BESTBOT Project” (in English, like some other contributions) …

New Journal on AI and Ethics

Springer is launching a new journal entitled “AI and Ethics”. This topic has been researched for several years from various perspectives, including information ethics, robot ethics (aka roboethics) and machine ethics. From the description: “AI and Ethics seeks to promote informed debate and discussion of the ethical, regulatory, and policy implications that arise from the development of AI. It will focus on how AI techniques, tools, and technologies are developing, including consideration of where these developments may lead in the future. The journal will provide opportunities for academics, scientists, practitioners, policy makers, and the public to consider how AI might affect our lives in the future, and what implications, benefits, and risks might emerge. Attention will be given to the potential intentional and unintentional misuses of the research and technology presented in articles we publish. Examples of harmful consequences include weaponization, bias in face recognition systems, and discrimination and unfairness with respect to race and gender.”

Dangerous Machines or Friendly Companions?

“Dangerous machines or friendly companions? One thing is clear: Our fascination with robots persists. This colloquium in cooperation with TA-SWISS will continue to pursue it.” This is what it says in the teaser of a text that can be found on the website of the Haus der elektronischen Künste Basel (HeK). “In science fiction films they are presented both as dangerous machines threatening human survival and as friendly companions in our everyday lives. Human-like or animal-like robots, such as the seal Paro, are used in health care, sex robots compensate for the deficits of human relationships, intelligent devices listen to our conversations and take care of our needs …” (Website HeK) And further: “This event is part of a current study by TA-SWISS which deals with the potentials and risks of social robots that simulate empathy and trigger emotions. The focus is on the new challenges in the relationship between man and machine. At the colloquium, the two scientists Prof. Dr. Oliver Bendel and Prof. Dr. Hartmut Schulze and the Swiss artist Simone C. Niquille will provide brief inputs on the topic and discuss the questions raised in a panel discussion.” (Website HeK) More information via www.hek.ch/en/program/events-en/event/kolloquium-soziale-roboter.html.

Launch of the Interactive AI Magazine

AAAI has announced the launch of the Interactive AI Magazine. According to the organization, the new platform provides online access to articles and columns from AI Magazine, as well as news and articles from AI Topics and other materials from AAAI. “Interactive AI Magazine is a work in progress. We plan to add lot more content on the ecosystem of AI beyond the technical progress represented by the AAAI conference, such as AI applications, AI industry, education in AI, AI ethics, and AI and society, as well as conference calendars and reports, honors and awards, classifieds, obituaries, etc. We also plan to add multimedia such as blogs and podcasts, and make the website more interactive, for example, by enabling commentary on posted articles. We hope that over time Interactive AI Magazine will become both an important source of information on AI and an online forum for conversations among the AI community.” (AAAI Press Release) More information via interactiveaimag.org.

Next ROBOPHILOSOPHY in Helsinki

One of the world’s most important conferences for robot philosophy (aka robophilosophy) and social robotics, ROBOPHILOSOPHY, took place from 18 to 21 August 2020, not in Aarhus (Denmark) as originally planned, but in virtual form due to the COVID-19 pandemic. The organizers and hosts were Marco Nørskov and Johanna Seibt. A considerable number of the lectures were devoted to machine ethics, such as “Moral Machines” (Aleksandra Kornienko), “Permissibility-Under-a-Description Reasoning for Deontological Robots” (Felix Lindner) and “The Morality Menu Project” (Oliver Bendel). The keynotes were given by Selma Šabanović (Indiana University Bloomington), Robert Sparrow (Monash University), Shannon Vallor (The University of Edinburgh), Alan Winfield (University of the West of England), Aimee van Wynsberghe (Delft University of Technology) and John Danaher (National University of Ireland). In his outstanding presentation, Winfield was sceptical about moral machines, whereupon Bendel made it clear in the discussion that they are useful in some areas and dangerous in others, and emphasized the importance of machine ethics for the study of machine and human morality, a point with which Winfield in turn agreed. The previous conference was held in Vienna in 2018. Keynote speakers at that time included Hiroshi Ishiguro, Joanna Bryson and Oliver Bendel. The next ROBOPHILOSOPHY will probably take place in 2022 at the University of Helsinki, as the organisers announced at the end of the event.

The MOML Project

In many cases it is important that an autonomous system acts and reacts adequately from a moral point of view. Some artifacts of machine ethics already exist, e.g., GOODBOT or LADYBIRD by Oliver Bendel or Nao as a care robot by Susan Leigh Anderson and Michael Anderson. But there is no standardization in the field of moral machines yet. The MOML project, initiated by Oliver Bendel, is trying to work in this direction. In the management summary of his bachelor thesis, Simon Giller writes: “We present a literature review in the areas of machine ethics and markup languages which shaped the proposed morality markup language (MOML). To overcome the most substantial problem of varying moral concepts, MOML uses the idea of the morality menu. The menu lets humans define moral rules and transfer them to an autonomous system to create a proxy morality. Analysing MOML excerpts allowed us to develop an XML schema which we then tested in a test scenario. The outcome is an XML based morality markup language for autonomous agents. Future projects can use this language or extend it. Using the schema, anyone can write MOML documents and validate them. Finally, we discuss new opportunities, applications and concerns related to the use of MOML. Future work could develop a controlled vocabulary or an ontology defining terms and commands for MOML.” The bachelor thesis will be publicly available in autumn 2020. It was supervised by Dr. Elzbieta Pustulka. A paper presenting the results will follow next year.
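To give a rough idea of what writing and checking a MOML document might involve, here is a minimal Python sketch. The element and attribute names (`moml`, `rule`, `id`, `active`, `condition`, `action`) are hypothetical and are not taken from Giller's actual schema. Python's standard library cannot validate against an XML Schema (XSD), so `check_structure` is only a simple stand-in; a third-party library such as lxml offers `etree.XMLSchema` for real schema validation.

```python
import xml.etree.ElementTree as ET

# Hypothetical MOML document: element and attribute names are invented for
# illustration and are NOT taken from the actual MOML schema.
MOML_DOC = """
<moml agent="chatbot">
  <rule id="no-lies" active="true">
    <condition>user asks a factual question</condition>
    <action>answer truthfully</action>
  </rule>
  <rule id="no-insults" active="false">
    <condition>user insults the agent</condition>
    <action>insult back</action>
  </rule>
</moml>
"""


def check_structure(root: ET.Element) -> list[str]:
    """Minimal stand-in for schema validation: collect structural problems."""
    errors = []
    if root.tag != "moml":
        errors.append(f"root element must be <moml>, got <{root.tag}>")
    for rule in root.findall("rule"):
        rid = rule.get("id", "?")
        if rule.get("active") not in ("true", "false"):
            errors.append(f"rule {rid}: 'active' must be 'true' or 'false'")
        for child in ("condition", "action"):
            if rule.find(child) is None:
                errors.append(f"rule {rid}: missing <{child}>")
    return errors


def active_rules(root: ET.Element) -> dict[str, tuple[str, str]]:
    """Map each active rule id to its (condition, action) pair."""
    return {
        rule.get("id"): (rule.findtext("condition"), rule.findtext("action"))
        for rule in root.findall("rule")
        if rule.get("active") == "true"
    }


root = ET.fromstring(MOML_DOC.strip())
print(check_structure(root))  # []
print(active_rules(root))
```

The point of the sketch is the division of labour the summary describes: humans write the rules in a declarative document, a checker rejects malformed documents, and the autonomous system only reads the rules that are switched on.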

Towards a Proxy Machine

“Once we place so-called ‘social robots’ into the social practices of our everyday lives and lifeworlds, we create complex, and possibly irreversible, interventions in the physical and semantic spaces of human culture and sociality. The long-term socio-cultural consequences of these interventions is currently impossible to gauge.” (Website Robophilosophy Conference) With these words the next Robophilosophy conference was announced. It would have taken place in Aarhus, Denmark, from 18 to 21 August 2020, but due to the COVID-19 pandemic it is being held online. One lecture will be given by Oliver Bendel. The abstract of the paper “The Morality Menu Project” states: “Machine ethics produces moral machines. The machine morality is usually fixed. Another approach is the morality menu (MOME). With this, owners or users transfer their own morality onto the machine, for example a social robot. The machine acts in the same way as they would act, in detail. A team at the School of Business FHNW implemented a MOME for the MOBO chatbot. In this article, the author introduces the idea of the MOME, presents the MOBO-MOME project and discusses advantages and disadvantages of such an approach. It turns out that a morality menu can be a valuable extension for certain moral machines.” In 2018, Hiroshi Ishiguro, Guy Standing, Catelijne Muller, Joanna Bryson and Oliver Bendel were keynote speakers. In 2020, Catrin Misselhorn, Selma Šabanović and Shannon Vallor will be presenting. More information via conferences.au.dk/robo-philosophy/.
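The core idea of a morality menu, namely that the owner's settings rather than a fixed program decide how the machine behaves, can be pictured with a minimal Python sketch. It assumes that each menu entry is a simple on/off rule; the entry names and the `reply_to_insult` behaviour are hypothetical and are not taken from the MOBO-MOME implementation.

```python
from dataclasses import dataclass, field


@dataclass
class MoralityMenu:
    """Hypothetical morality menu: each entry is a moral rule the owner switches on or off."""

    settings: dict[str, bool] = field(
        default_factory=lambda: {
            "tell_the_truth": True,
            "use_polite_language": True,
            "respond_to_insults_with_insults": False,
        }
    )

    def set_rule(self, rule: str, enabled: bool) -> None:
        """Transfer one of the owner's moral choices onto the machine."""
        if rule not in self.settings:
            raise KeyError(f"unknown menu entry: {rule}")
        self.settings[rule] = enabled


def reply_to_insult(menu: MoralityMenu) -> str:
    """Toy chatbot behaviour: the reply depends on the owner's menu choices."""
    if menu.settings["respond_to_insults_with_insults"]:
        return "Same to you!"
    if menu.settings["use_polite_language"]:
        return "I'm sorry you feel that way. How can I help?"
    return "Noted."


menu = MoralityMenu()
print(reply_to_insult(menu))  # I'm sorry you feel that way. How can I help?
menu.set_rule("respond_to_insults_with_insults", True)
print(reply_to_insult(menu))  # Same to you!
```

The machine morality is not fixed in the code here: the same chatbot behaves differently depending on which entries its owner has switched on, which is exactly where both the advantages and the risks of the approach lie.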

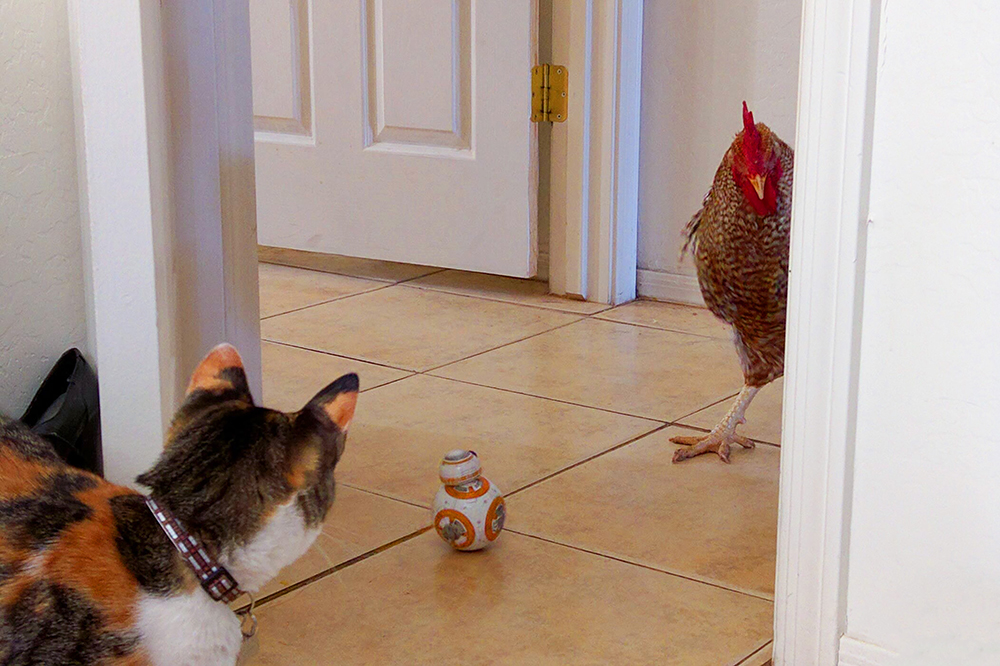

Towards Animal-machine Interaction

Animal-machine interaction (AMI) and animal-computer interaction (ACI) are increasingly important research areas. For years, semi-autonomous and autonomous machines have been multiplying all over the world, not only in factories, but also in outdoor areas and in households. Agricultural robots and service robots, some with artificial intelligence, encounter wild animals, farm animals and pets. Jackie Snow, who writes for the New York Times, National Geographic and the Wall Street Journal, talked to several people about the subject last year. In an article for Fast Company, she quoted the ethicists Oliver Bendel (“Handbuch Maschinenethik”) and Peter Singer (“Animal Liberation”). Clara Mancini (“Animal-computer interaction: A manifesto”) also expressed her point of view. The article, titled “AI’s next ethical challenge: how to treat animals”, is available on the Fast Company website. Today, research is also devoted to social robots. One question is how animals react to them. Human-computer interaction (HCI) experts from Yale University recently looked into this topic. Another question is whether we can create social robots specifically for animals. The first steps were made with toys and automatic feeders for pets. Could a social robot replace a human caregiver for weeks on end? What features should it have? In this context, we must pay attention to animal welfare from the outset. Some animals will love the new freedom, others will hate it.

Online Survey on Hugs by Robots

Robots can hug us if they have two arms, as Pepper and P-Care do, and to a limited extent even with one arm. However, their hugs and touches feel different from those of humans. If warmth and softness are added, as in the HuggieBot project, the effect improves, but it is still not the same. In a hug it is important that another person hugs us (hugging ourselves is something entirely different), and that this person stands in a certain relationship to us. He or she may be a stranger, but there must be trust or desire. Whether this is the case with a robot must be assessed case by case. A multi-stage HUGGIE project is currently underway at the School of Business FHNW under the supervision of Prof. Dr. Oliver Bendel. Ümmühan Korucu and Leonie Brogle have started with an online survey that targets the entire German-speaking world. The aim is to gain insights into how people of all ages and sexes judge a hug by a robot. In crises and catastrophes involving prolonged isolation, such as the COVID-19 pandemic, proxy hugs of this kind could well play a role. Prisons and long journeys through space are also possible fields of application. Click here for the survey (only in German): ww3.unipark.de/uc/HUGGIE/ …

A Morality Markup Language

There are several markup languages for different applications. The best known is certainly the Hypertext Markup Language (HTML). In the field of artificial intelligence (AI), AIML has established itself for chatbots. SSML (Speech Synthesis Markup Language) is used for synthetic voices. The question is whether these languages exhaust the possibilities with regard to autonomous systems. In the article “The Morality Menu” by Prof. Dr. Oliver Bendel, a Morality Markup Language (MOML) was proposed for the first time. In 2019, a student research project supervised by the information and machine ethicist investigated what existing languages can express with regard to moral aspects and whether a MOML is justified. The results were presented in January 2020. Starting at the end of March 2020, a bachelor thesis at the School of Business FHNW will go one step further. In it, the basic features of a Morality Markup Language are to be developed. The basic structure and specific commands will be proposed and described. The application areas, advantages and disadvantages of such a markup language are to be presented. The client of the work is Prof. Dr. Oliver Bendel, the supervisor Dr. Elzbieta Pustulka.