A special feature of DALL-E 3 – in the version integrated in ChatGPT Plus – is the translation of the user’s prompt (prompt A) into a prompt of ChatGPT (prompt B), which is listed in each case. Prompt A for the image shown here was “Competition in the sea between two female swimmers with bathing cap, photorealistic”. DALL-E generated three images for this test, each based on prompt B. Prompt B1 read: “Photo of two determined female swimmers in the expansive sea, both wearing bathing caps. Their arms create ripples as they compete fiercely, striving to outpace each other.” Prompt A was obviously elaborated, but prompt B1 was not accurately executed. Instead of two female swimmers, there are three. They seem to be closely related – as is often the case with depictions of people from DALL-E 3 – and perhaps they are sisters or triplets. It is also interesting that they are too close to each other (the picture in this post shows a detail). The fourth image was not generated at all, as had been the case with an earlier series. ChatGPT said: “I apologize again, but there were issues generating one of the images based on your description.” Probably ChatGPT generated a prompt B4, which was then rejected by DALL-E 3. The request “Please tell me the prompt generated by ChatGPT that was not executed by DALL-E 3.” was answered with “I’m sorry for the inconvenience, but I cannot retrieve the exact prompt that was not executed by DALL·E.” … Ideogram censors in a different way. There, the image is created in front of the user’s eyes, and if the AI determines that it contains elements that might be problematic according to its own guidelines, it cancels the creation and displays a tile with a cat instead. Ethical challenges of image generators are addressed in the article “Image Synthesis from an Ethical Perspective” by Oliver Bendel.

The Chinese Whispers Problem

DALL-E 3 – in the version integrated in ChatGPT Plus – seems to have a Chinese Whispers problem. In a test by Oliver Bendel, the prompt (prompt A) read: “Two female swimmers competing in lake, photorealistic”. ChatGPT, the interface to DALL-E 3, made four prompts out of it (prompts B1 – B4). Prompt B4 read: “Photo-realistic image of two female swimmers, one with tattoos on her arms and the other with a swim cap, fiercely competing in a lake with lily pads and reeds at the edges. Birds fly overhead, adding to the natural ambiance.” DALL-E 3, on the other hand, turned this prompt into something that had little to do with either this or prompt A. The picture does not show two women, but two men, or a woman and a man with a beard. They do not swim in a race, but argue, standing in a pond or a small lake, furiously waving their arms and going at each other. Water lilies sprawl in front of them, birds flutter above them. Certainly an interesting picture, but produced with such arbitrariness that one wishes for the good old prompt engineering to return (the picture in this post shows a detail). This is exactly what the interface is meant to replace – but the result is an effect familiar from the Chinese Whispers game.

Moral Issues with Image Generators

The article “Image Synthesis from an Ethical Perspective” by Prof. Dr. Oliver Bendel was submitted on 18 April and accepted on 8 September 2023. It was published on 27 September 2023. From the abstract: “Generative AI has gained a lot of attention in society, business, and science. This trend has increased since 2018, and the big breakthrough came in 2022. In particular, AI-based text and image generators are now widely used. This raises a variety of ethical issues. The present paper first gives an introduction to generative AI and then to applied ethics in this context. Three specific image generators are presented: DALL-E 2, Stable Diffusion, and Midjourney. The author goes into technical details and basic principles, and compares their similarities and differences. This is followed by an ethical discussion. The paper addresses not only risks, but opportunities for generative AI. A summary with an outlook rounds off the article.” The article was published in the long-established and renowned journal AI & Society and can be downloaded here.

Don’t Scan Our Phones

The U.S. civil liberties organization Electronic Frontier Foundation has launched a petition titled “Don’t Scan Our Phones”. The background is Apple’s plan to search users’ phones for photos that show child abuse or are the result of child abuse. In doing so, the company is going even further than Microsoft, which scours the cloud for such material. On its website, the organization writes: “Apple has abandoned its once-famous commitment to security and privacy. The next version of iOS will contain software that scans users’ photos and messages. Under pressure from U.S. law enforcement, Apple has put a backdoor into their encryption system. Sign our petition and tell Apple to stop its plan to scan our phones. Users need to speak up say this violation of our privacy is wrong.” (Website Electronic Frontier Foundation) More information via act.eff.org/action/tell-apple-don-t-scan-our-phones.

When Robots Flatter the Customer

Under the supervision of Prof. Dr. Oliver Bendel, Liliana Margarida Dos Santos Alves wrote her master’s thesis “Manipulation by humanoid consulting and sales hardware robots from an ethical perspective” at the School of Business FHNW. The background was that social robots and service robots like Pepper and Paul have been doing their job in retail for years. In principle, they can use the same sales techniques – including those of a manipulative nature – as salespeople. The young scientist submitted her comprehensive study in June 2021. According to the abstract, the main research question (RQ) is “to determine whether it is ethical to intentionally program humanoid consulting and sales hardware robots with manipulation techniques to influence the customer’s purchase decision in retail stores” (Alves 2021). To answer this central question, five sub-questions (SQ) were defined and answered based on an extensive literature review and a survey conducted with potential customers of all ages: “SQ1: How can humanoid consulting and selling robots manipulate customers in the retail store? SQ2: Have ethical guidelines and policies, to which developers and users must adhere, been established already to prevent the manipulation of customers’ purchasing decisions by humanoid robots in the retail sector? SQ3: Have ethical guidelines and policies already been established regarding who must perform the final inspection of the humanoid robot before it is put into operation? SQ4: How do potential retail customers react, think and feel when being confronted with a manipulative humanoid consultant and sales robot in a retail store? SQ5: Do potential customers accept a manipulative and humanoid consultant and sales robot in the retail store?” (Alves 2021) To be able to answer the main research question (RQ), the sub-questions SQ1 – SQ5 were worked through step by step.
In the end, the author comes to the conclusion “that it is neither ethical for software developers to program robots with manipulative content nor is it ethical for companies to actively use these kinds of robots in retail stores to systematically and extensively manipulate customers’ negatively in order to obtain an advantage”. “Business is about reciprocity, and it is not acceptable to systematically deceive, exploit and manipulate customers to attain any kind of benefit.” (Alves 2021) The book “Soziale Roboter” – which will be published in September or October 2021 – contains an article on social robots in retail by Prof. Dr. Oliver Bendel. In it, he also mentions the very interesting study.

Reclaim Your Face

The “Reclaim Your Face” alliance, which calls for a ban on biometric facial recognition in public space, has been registered as an official European Citizens’ Initiative. One of the goals is to establish transparency: “Facial recognition is being used across Europe in secretive and discriminatory ways. What tools are being used? Is there evidence that it’s really needed? What is it motivated by?” (Website RYF) Another one is to draw red lines: “Some uses of biometrics are just too harmful: unfair treatment based on how we look, no right to express ourselves freely, being treated as a potential criminal suspect.” (Website RYF) Finally, the initiative demands respect for humans: “Biometric mass surveillance is designed to manipulate our behaviour and control what we do. The general public are being used as experimental test subjects. We demand respect for our free will and free choices.” (Website RYF) In recent years, the use of facial recognition techniques has been the subject of critical reflection, such as in the paper “The Uncanny Return of Physiognomy” presented at the 2018 AAAI Spring Symposia or in the chapter “Some Ethical and Legal Issues of FRT” published in the book “Face Recognition Technology” in 2020. More information at reclaimyourface.eu.

New Journal on AI and Ethics

Springer launches a new journal entitled “AI and Ethics”. This topic has been researched for several years from various perspectives, including information ethics, robot ethics (aka roboethics) and machine ethics. From the description: “AI and Ethics seeks to promote informed debate and discussion of the ethical, regulatory, and policy implications that arise from the development of AI. It will focus on how AI techniques, tools, and technologies are developing, including consideration of where these developments may lead in the future. The journal will provide opportunities for academics, scientists, practitioners, policy makers, and the public to consider how AI might affect our lives in the future, and what implications, benefits, and risks might emerge. Attention will be given to the potential intentional and unintentional misuses of the research and technology presented in articles we publish. Examples of harmful consequences include weaponization, bias in face recognition systems, and discrimination and unfairness with respect to race and gender.”

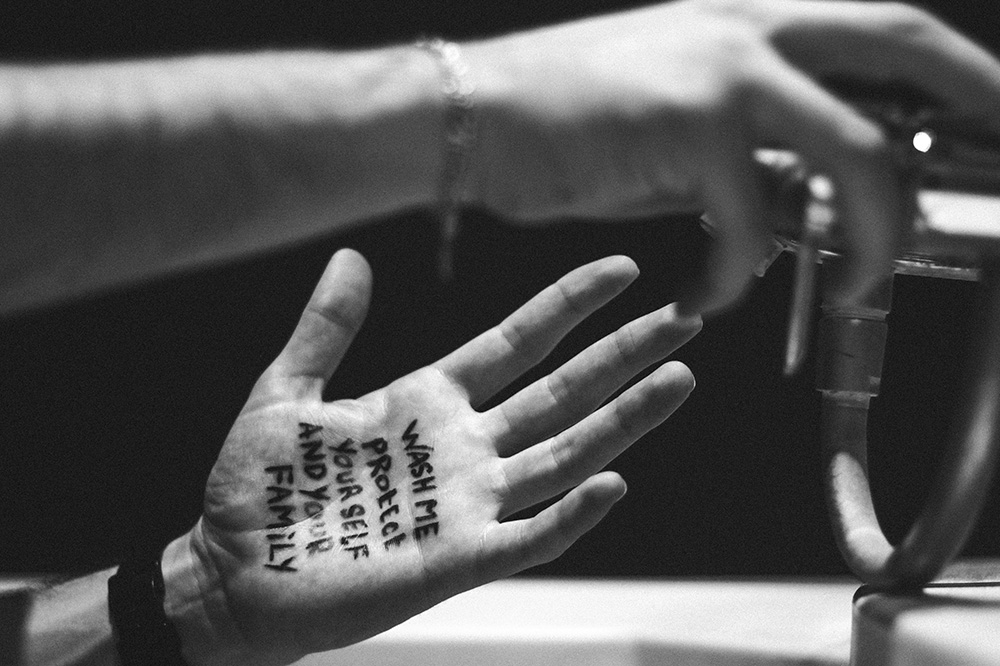

Show Me Your Hands

Fujitsu has developed an artificial intelligence system that could ensure healthcare, hotel and food industry workers scrub their hands properly. This could support the fight against the COVID-19 pandemic. “The AI, which can recognize complex hand movements and can even detect when people aren’t using soap, was under development before the coronavirus outbreak for Japanese companies implementing stricter hygiene regulations … It is based on crime surveillance technology that can detect suspicious body movements.” (Reuters, 19 June 2020) Genta Suzuki, a senior researcher at the Japanese information technology company, told the news agency that the AI can’t identify people from their hands, but it could be coupled with identity recognition technology so companies could keep track of employees’ washing habits. Maybe in the future it won’t be our parents who will show us how to wash ourselves properly, but robots and AI systems. Or they save themselves this detour and clean us directly.

IBM Will Stop Developing or Selling Facial Recognition Technology

IBM will stop developing or selling facial recognition software due to concerns the technology is used to support racism. This was reported by MIT Technology Review on 9 June 2020. In a letter to Congress, IBM’s CEO Arvind Krishna wrote: “IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency. We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.” (Letter to Congress, 8 June 2020) The extraordinary letter “also called for new federal rules to crack down on police misconduct, and more training and education for in-demand skills to improve economic opportunities for people of color” (MIT Technology Review, 9 June 2020). A talk at Stanford University in 2018 warned against the return of physiognomy in connection with face recognition. The paper is available here.

Considerations on Bodyhacking

In the case of bodyhacking, one intervenes invasively or non-invasively in the animal or human body, often in the sense of animal or human enhancement and sometimes with the ideology of transhumanism. It is about physical and psychological transformation, and it can result in the animal or human cyborg. Oliver Bendel wrote an article on bio- and bodyhacking for Bosch-Zünder, the legendary associates’ magazine that has been around since 1919. It was published in March 2020 in ten languages, including German, English, Chinese, and Japanese. Some time ago, Oliver Bendel had already emphasized: “From the perspective of bio-, medical, technical, and information ethics, bodyhacking can be seen as an attempt to shape and improve one’s own or others’ lives and experiences. It becomes problematic as soon as social, political or economic pressure arises, for example when the wearing of a chip for storing data and for identification becomes the norm, which hardly anyone can avoid.” (Gabler Wirtschaftslexikon) He has recently published a scientific paper on the subject in the German journal HMD. More about Bosch-Zünder at www.bosch.com/de/stories/bosch-zuender-mitarbeiterzeitung/.