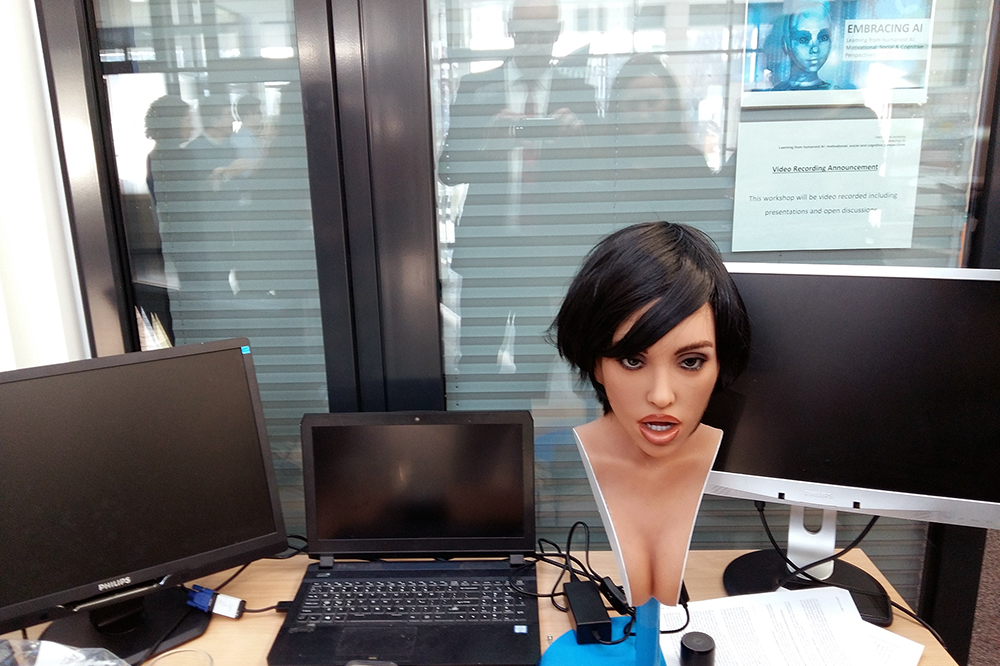

The international workshop “Learning from Humanoid AI: Motivational, Social & Cognitive Perspectives” took place from 30 November to 1 December 2019 at the University of Potsdam. Dr. Jessica Szczuka raised the question: “What do men and women see in sex robots?” … Her talk was based on the paper “Jealousy 4.0? An empirical study on jealousy-related discomfort of women evoked by other women and gynoid robots” by herself and Nicole Krämer. In their introduction the authors write: “In a paper discussing machine ethics, Bendel asked whether it is ‘possible to be unfaithful to the human love partner with a sex robot, and can a man or a woman be jealous because of the robot’s other love affairs?’ … In this line, the present study aims to empirically investigate whether women perceive robots as potential competitors to their relationship in the same way as they perceive other women to be so. As the degree of human-likeness of robots contributes to the similarity between female-looking robots and women, we additionally investigated differences between machine-like female-looking robots and human-like female-looking robots with respect to their ability to evoke jealousy-related discomfort.” (Paper) The paper can be accessed here.

Robophilosophy 2020

“Once we place so-called ‘social robots’ into the social practices of our everyday lives and lifeworlds, we create complex, and possibly irreversible, interventions in the physical and semantic spaces of human culture and sociality. The long-term socio-cultural consequences of these interventions is currently impossible to gauge.” (Website Robophilosophy Conference) With these words the next Robophilosophy conference is announced. It will take place from 18 to 21 August 2020 in Aarhus, Denmark. The CfP raises questions such as: “How can we create cultural dynamics with or through social robots that will not impact our value landscape negatively? How can we develop social robotics applications that are culturally sustainable? If cultural sustainability is relative to a community, what can we expect in a global robot market? Could we design human-robot interactions in ways that will positively cultivate the values we, or people anywhere, care about?” (Website Robophilosophy Conference) In 2018, Hiroshi Ishiguro, Guy Standing, Catelijne Muller, Joanna Bryson, and Oliver Bendel were keynote speakers. In 2020, Catrin Misselhorn, Selma Sabanovic, and Shannon Vallor will be presenting. More information via conferences.au.dk/robo-philosophy/.

How to Improve Robot Hugs

Hugs are very important to many of us. We are embraced by familiar and strange people. When we hug ourselves, it does not have the same effect. And when a robot hugs us, it has no effect at all – or we don’t feel comfortable. But you can change that a bit. Alexis E. Block and Katherine J. Kuchenbecker from the Max Planck Institute for Intelligent Systems have published a paper on a research project in this field. The purpose of the project was to evaluate human responses to different robot physical characteristics and hugging behaviors. “Analysis of the results showed that people significantly prefer soft, warm hugs over hard, cold hugs. Furthermore, users prefer hugs that physically squeeze them and release immediately when they are ready for the hug to end. Taking part in the experiment also significantly increased positive user opinions of robots and robot use.” (Abstract) The paper “Softness, Warmth, and Responsiveness Improve Robot Hugs” was published in the International Journal of Social Robotics in January 2019 (First Online: 25 October 2018). It is available via link.springer.com/article/10.1007/s12369-018-0495-2.

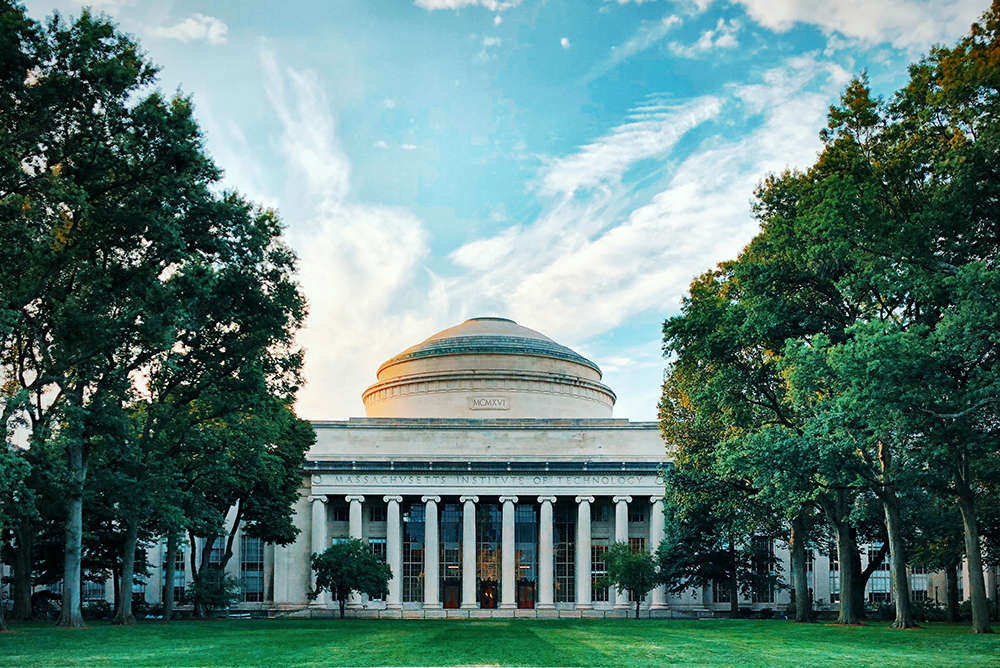

Ethics in AI for Kids and Teens

In summer 2019, Blakeley Payne ran a very special course at MIT. According to an article in Quartz magazine, the graduate student had created an AI ethics curriculum to make kids and teens aware of how AI systems mediate their everyday lives. “By starting early, she hopes the kids will become more conscious of how AI is designed and how it can manipulate them. These lessons also help prepare them for the jobs of the future, and potentially become AI designers rather than just consumers.” (Quartz, 4 September 2019) Not everyone is convinced that artificial intelligence is the right topic for kids and teens. “Some argue that developing kindness, citizenship, or even a foreign language might serve students better than learning AI systems that could be outdated by the time they graduate. But Payne sees middle school as a unique time to start kids understanding the world they live in: it’s around ages 10 to 14 year that kids start to experience higher-level thoughts and deal with complex moral reasoning. And most of them have smartphones loaded with all sorts of AI.” (Quartz, 4 September 2019) There is no doubt that the MIT course could be a role model for schools around the world. The renowned university once again seems to be setting new standards.

The New Dangers of Face Recognition

The dangers of face recognition are being discussed more and more. A new initiative aims to ban the use of the technology to monitor the American population. The AI Now Institute already warned of the risks in 2018, as did Oliver Bendel. The ethicist had a particular use in mind. In the 21st century, attempts are increasingly being made to connect face recognition to the pseudoscience of physiognomy, which has its origins in ancient times. From the appearance of persons, conclusions are drawn about their inner selves, and attempts are made to identify character traits, personality traits and temperament, or political and sexual orientation. Biometrics plays a role in this concept. It was founded in the eighteenth century, when physiognomy under the lead of Johann Caspar Lavater had its dubious climax. In his paper “The Uncanny Return of Physiognomy”, Oliver Bendel elaborates the basic principles of this topic; selected projects from research and practice are presented and, from an ethical perspective, the possibilities of face recognition are subjected to fundamental critique in this context, including the above examples. The philosopher presented his paper on 27 March 2018 at Stanford University (“AI and Society: Ethics, Safety and Trustworthiness in Intelligent Agents”, AAAI 2018 Spring Symposium Series). The whole volume is available here.

Fighting Deepfakes with Deepfakes

A deepfake (or deep fake) is a picture or video created with the help of artificial intelligence that looks authentic but is not. The term also covers the methods and techniques used in this context. Machine learning and especially deep learning are used. Deepfakes are created as works of art and visual objects, or as means of discrediting, manipulation and propaganda. Politics and pornography are therefore closely interwoven with the phenomenon. According to Futurism, Facebook is teaming up with a consortium of Microsoft researchers and several prominent universities for a “Deepfake Detection Challenge”. “The idea is to build a data set, with the help of human user input, that’ll help neural networks detect what is and isn’t a deepfake. The end result, if all goes well, will be a system that can reliably fake videos online. Similar data sets already exist for object or speech recognition, but there isn’t one specifically made for detecting deepfakes yet.” (Futurism, 5 September 2019) The winning team will get a prize, presumably a substantial sum of money. Facebook is investing a total of 10 million dollars in the competition.

The Fight against Plastic in the Seas

The pollution of water by plastic is a topic that has been in the media for a few years now. In 2015, the School of Engineering FHNW and the School of Business FHNW investigated whether a robotic fish – like Oliver Bendel’s CLEANINGFISH (2014) – could be a solution. In 2018, the information and machine ethicist commissioned a further study to investigate several existing or planned projects dealing with marine pollution. Rolf Stucki’s final thesis in the EUT study program was based on “a literature research on the current state of the plastics problem worldwide and its effects, but also on the properties and advantages of plastics” (Management Summary, own translation). “In addition, interviews were conducted with representatives of the projects. In order to assess the internal company factors (strengths, weaknesses) and external environmental factors (opportunities, risks), SWOT analyses were prepared on the basis of the answers and the research” (Management Summary). According to Stucki, the results show that most projects are financially dependent on sponsors and donors. Two of them are in the concept phase; they should prove their technical and financial feasibility in the medium term. With regard to social commitment, it can be said that all six projects are very active. A poster shows a comparison (the photos were altered for publication in this blog). WasteShark stands out among these projects as a robot. It is, so to speak, the CLEANINGFISH that has become reality.

Robots, Empathy and Emotions

“Robots, Empathy and Emotions” – this research project was tendered some time ago. The contract was awarded to a consortium of FHNW, ZHAW and the University of St. Gallen. The applicant, Prof. Dr. Hartmut Schulze from the FHNW School of Applied Psychology, covers the field of psychology. The co-applicant Prof. Dr. Oliver Bendel from the FHNW School of Business takes the perspective of information, robot and machine ethics, and the co-applicant Prof. Dr. Maria Schubert from the ZHAW that of nursing science. The client TA-SWISS stated on its website: “What influence do robots … have on our society and on the people who interact with them? Are robots perhaps rather snitches than confidants? … What do we expect from these machines or what can we effectively expect from them? Numerous sociological, psychological, economic, philosophical and legal questions related to the present and future use and potential of robots are still open.” (Website TA-SWISS, own translation) The kick-off meeting with a top-class accompanying group took place in Bern, the capital of Switzerland, on 26 June 2019.

About Basic Property

The title of one of the AAAI 2019 Spring Symposia was “Interpretable AI for Well-Being: Understanding Cognitive Bias and Social Embeddedness”. An important keyword here is “social embeddedness”. Social embeddedness of AI includes issues like “AI and future economics (such as basic income, impact of AI on GDP)” or “well-being society (such as happiness of citizen, life quality)”. In his paper “Are Robot Tax, Basic Income or Basic Property Solutions to the Social Problems of Automation?”, Oliver Bendel discusses and criticizes these approaches in the context of automation and digitization. Moreover, he develops a relatively unknown proposal, unconditional basic property, and presents its potentials as well as its risks. The lecture by Oliver Bendel took place on 26 March 2019 at Stanford University and led to lively discussions. It was nominated for the “best presentation”. The paper has now been published as a preprint and can be downloaded here.

No Solution to the Nursing Crisis

“In Germany, around four million people will be dependent on care and nursing in 2030. Already today there is talk of a nursing crisis, which is likely to intensify further in view of demographic developments in the coming years. Fewer and fewer young people will be available to the labour market as potential carers for the elderly. Experts estimate that there will be a shortage of around half a million nursing staff in Germany by 2030. Given these dramatic forecasts, are nursing robots possibly the solution to the problem? Scientists from the disciplines of computer science, robotics, medicine, nursing science, social psychology, and philosophy explored this question at a Berlin conference of the Daimler and Benz Foundation. The machine ethicist and conference leader Professor Oliver Bendel first of all stated that many people had completely wrong ideas about care robots: ‘In the media there are often pictures or illustrations that do not correspond to reality’.” (Die Welt, 14 June 2019) With these words an article in the German newspaper Die Welt begins. Norbert Lossau provides a detailed description of the Berlin Colloquium, which took place on 22 May 2019. The article is available in both English and German. So, are robots the answer to the nursing crisis? Oliver Bendel would disagree. They can be useful for caregivers and patients. However, they do not solve the major issues.