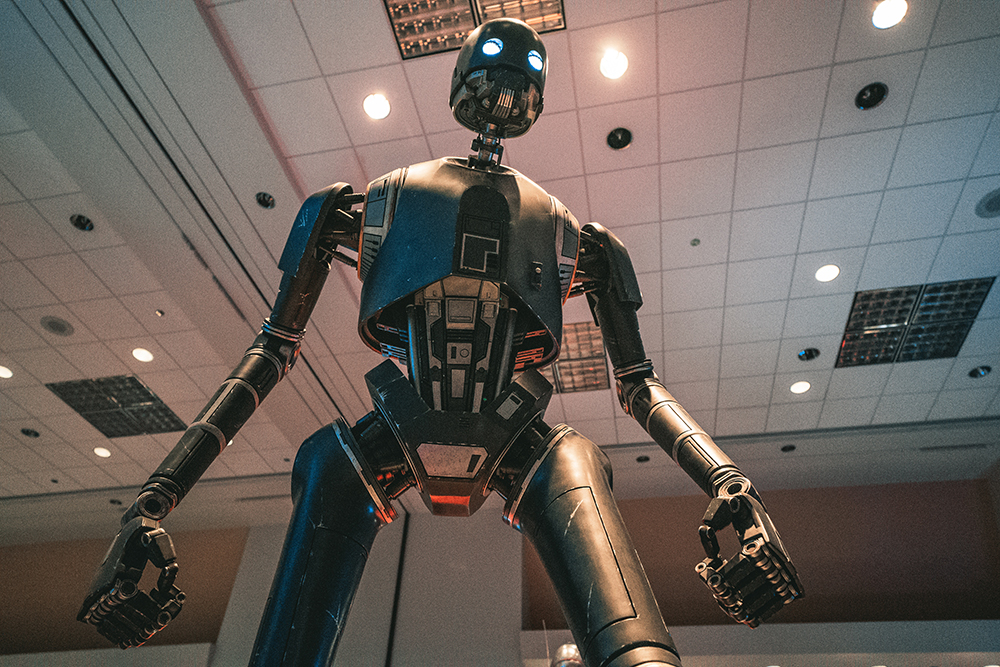

Amazon Rekognition is well-known software for facial recognition, including emotion detection. It is used in the BESTBOT, a moral machine that hides an immoral machine. The immorality stems precisely from facial recognition, which endangers the user’s privacy and informational autonomy. The project is intended not least to draw attention to this risk. Amazon announced on 12 August 2019 that it had improved and expanded its system: “Today, we are launching accuracy and functionality improvements to our face analysis features. Face analysis generates metadata about detected faces in the form of gender, age range, emotions, attributes such as ‘Smile’, face pose, face image quality and face landmarks. With this release, we have further improved the accuracy of gender identification. In addition, we have improved accuracy for emotion detection (for all 7 emotions: ‘Happy’, ‘Sad’, ‘Angry’, ‘Surprised’, ‘Disgusted’, ‘Calm’ and ‘Confused’) and added a new emotion: ‘Fear’.” (Amazon, 12 August 2019) Because the BESTBOT also accesses other systems such as MS Face API and Kairos, it can already recognize fear. So the change at Amazon means no change for this artifact of machine ethics.
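To make the emotion metadata described above more concrete, here is a minimal sketch of how the most likely emotion could be read from a face analysis result. It assumes a response shaped like the one returned by Rekognition's face detection call (a `FaceDetails` list whose entries carry an `Emotions` list of `Type` and `Confidence` pairs). The helper name `dominant_emotion` and the sample values are invented for illustration, and this is not how the BESTBOT itself is implemented.

```python
from typing import Optional


def dominant_emotion(face_detail: dict) -> Optional[str]:
    """Return the emotion label with the highest confidence, or None if absent."""
    emotions = face_detail.get("Emotions", [])
    if not emotions:
        return None
    # Each entry is assumed to look like {"Type": "FEAR", "Confidence": 80.1}.
    best = max(emotions, key=lambda e: e["Confidence"])
    return best["Type"]


# Hypothetical sample data; in practice this dict would come from the service
# (for example via boto3's detect_faces with Attributes=["ALL"]).
sample_response = {
    "FaceDetails": [
        {
            "Emotions": [
                {"Type": "CALM", "Confidence": 12.5},
                {"Type": "FEAR", "Confidence": 80.1},
                {"Type": "HAPPY", "Confidence": 3.0},
            ]
        }
    ]
}

labels = [dominant_emotion(face) for face in sample_response["FaceDetails"]]
print(labels)
```

The sketch works on a plain dictionary, so it can be tried without any network access or cloud account.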

Conversational Agents: Acting on the Wave of Research and Development

The papers of the CHI 2019 workshop “Conversational Agents: Acting on the Wave of Research and Development” (Glasgow, 5 May 2019) are now listed on convagents.org. The extended abstract by Oliver Bendel (School of Business FHNW) entitled “Chatbots as Moral and Immoral Machines” can be downloaded here. The workshop brought together experts from all over the world who are working on the fundamentals of chatbots and voicebots and implementing them in different ways. Companies such as Microsoft, Mozilla and Salesforce were also present. Approximately 40 extended abstracts were submitted. On 6 May, following the 35 workshops, a bagpipe player opened the four-day conference. Dr. Aleks Krotoski of Pillowfort Productions gave the first keynote. One of the morning paper sessions was dedicated to the topic “Values and Design”. All in all, both the classical fields of applied ethics and the young discipline of machine ethics were represented at the conference. More information is available via chi2019.acm.org.

Robots, Empathy and Emotions

“Robots, Empathy and Emotions” – this research project was put out to tender some time ago. The contract was awarded to a consortium of FHNW, ZHAW and the University of St. Gallen. The applicant, Prof. Dr. Hartmut Schulze from the FHNW School of Applied Psychology, covers the field of psychology. The co-applicant Prof. Dr. Oliver Bendel from the FHNW School of Business takes the perspective of information, robot and machine ethics, while the co-applicant Prof. Dr. Maria Schubert from the ZHAW takes that of nursing science. The client TA-SWISS stated on its website: “What influence do robots … have on our society and on the people who interact with them? Are robots perhaps rather snitches than confidants? … What do we expect from these machines or what can we effectively expect from them? Numerous sociological, psychological, economic, philosophical and legal questions related to the present and future use and potential of robots are still open.” (Website TA-SWISS, own translation) The kick-off meeting with a top-class accompanying group took place in Bern, the capital of Switzerland, on 26 June 2019.

The Relationship between Artificial Intelligence and Machine Ethics

Artificial intelligence takes human or animal intelligence as a reference and attempts to reproduce it in certain aspects. It can also try to deviate from human or animal intelligence, for example by having its systems solve problems in a different way. Machine ethics is dedicated to machine morality, both producing and investigating it. Whether one likes the concepts and methods of machine ethics or not, one must acknowledge that novel autonomous machines are emerging that appear, in a certain sense, more complete than earlier ones. It is almost surprising that artificial morality did not join artificial intelligence much earlier. Machines that simulate human intelligence and human morality for manageable areas of application seem to be an especially good idea. But what if a superintelligence with a supermorality forms a new species superior to ours? That’s science fiction, of course, but it is also something that some scientists want to achieve. Basically, it’s important to clarify the terms and explain how they are connected. This is done in a graphic that was published in July 2019 on informationsethik.net and is linked here.

Deceptive Machines

“AI has definitively beaten humans at another of our favorite games. A poker bot, designed by researchers from Facebook’s AI lab and Carnegie Mellon University, has bested some of the world’s top players …” (The Verge, 11 July 2019) According to the magazine, the bot, named Pluribus, was remarkably good at bluffing its opponents. The Wall Street Journal reported: “A new artificial intelligence program is so advanced at a key human skill – deception – that it wiped out five human poker players with one lousy hand.” (Wall Street Journal, 11 July 2019) Of course, bluffing does not have to be equated with cheating, but interesting scientific questions arise in this context. At the conference “Machine Ethics and Machine Law” in Krakow in 2016, Ronald C. Arkin, Oliver Bendel, Jaap Hage, and Mojca Plesnicar discussed the following question on a panel: “Should we develop robots that deceive?” Ron Arkin (who is in military research) and Oliver Bendel (who is not) came to the conclusion that we should, but they had very different arguments. The ethicist from Zurich, inventor of the LIEBOT, advocates free, independent research in which problematic and deceptive machines are also developed for the sake of an important gain in knowledge, but is committed to regulating the areas of application (for example dating portals or military operations). Further information about Pluribus can be found in the paper itself, entitled “Superhuman AI for multiplayer poker”.