Since 2012, Prof. Dr. Oliver Bendel has been building chatbots and voice assistants, partly with his students and partly on his own. These have been covered by the media and have attracted the interest of NASA. He acquired his theoretical knowledge and practical illustrative material through his doctorate on this topic a quarter of a century ago. Since 2022, his focus has been on dialog systems for dead and endangered languages. Under his supervision, Karim N’diaye developed the chatbot @ve for Latin, and Dalil Jabou developed the chatbot @llegra for Vallader, an idiom of Rhaeto-Romanic, enhanced with voice output. Since May 2024, he has been testing the scope of GPTs – “custom versions of ChatGPT”, as OpenAI calls them – for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. Prototypes have already been created for all three, namely Irish Girl, Maori Girl, and Adelina (for Basque). He is also investigating the potential for extinct languages such as Egyptian and Akkadian. The GPTs do not readily communicate in hieroglyphs or cuneiform, but they can certainly represent and explain the signs of these scripts. It is even possible to enter entire sentences and ask how they can be improved. The result is then – to stay with Akkadian – complex structures made up of cuneiform characters. The GPT H@mmur@pi specializes in this language and is also familiar with the culture and history of the region.

Teaching and Learning with GPTs

In the spring semester of 2024, Prof. Dr. Oliver Bendel integrated virtual tutors into his teaching. These were “custom versions of ChatGPT”, so-called GPTs. Social Robotics Girl, created in November 2023, was available for the elective modules on social robotics, and Digital Ethics Girl, available from February 2024, for the compulsory modules “Ethik und Recht” and “Ethics and Law” within the Wirtschaftsinformatik and Business Information Technology degree programmes (FHNW School of Business) and “Recht und Ethik” within Geomatics (FHNW School of Architecture, Civil Engineering and Geomatics). The virtual tutors have the “world knowledge” of GPT-4, but also the specific expertise of the technology philosopher and business information systems scientist from Zurich. Experience has shown that the GPTs can provide useful impulses and liven up lessons. They show their particular strength in group work: instead of consulting their lecturer’s books, which is hardly practical under time pressure, students can ask the virtual tutors specific questions. Last but not least, the GPTs open up opportunities for self-regulated learning. The paper “How Can GenAI Foster Well-being in Self-regulated Learning?” by Stefanie Hauske and Oliver Bendel was published in May 2024; it was submitted to the AAAI Spring Symposia in December 2023 and presented at Stanford University at the end of March 2024.

Maori Girl Can Speak and Write Maori

Conversational agents have been the subject of Prof. Dr. Oliver Bendel’s research for a quarter of a century. He dedicated his doctoral thesis at the University of St. Gallen, written from the end of 1999 to the end of 2002, to them, or more precisely to pedagogical agents, which would probably be called virtual learning companions today. He has been a professor at the FHNW School of Business since 2009. From 2012, he mainly developed chatbots and voice assistants in the context of machine ethics, including GOODBOT, LIEBOT, BESTBOT, and SPACE THEA. In 2022, the information systems specialist and philosopher of technology turned his attention to dead and endangered languages. Under his supervision, Karim N’diaye developed the chatbot @ve for Latin, and Dalil Jabou developed the chatbot @llegra for Vallader, an idiom of Rhaeto-Romanic, enhanced with voice output. He is currently testing the scope of GPTs – “customized versions of ChatGPT”, as OpenAI calls them – for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material for these languages. On May 9, 2024, one week after Irish Girl, a first version of Maori Girl was created. At first glance, it seems to have a good grasp of the Polynesian language of the indigenous people of New Zealand. Its answers can be translated into English or German on request. Maori Girl is available in the GPT Store and will be further improved over the next few weeks.

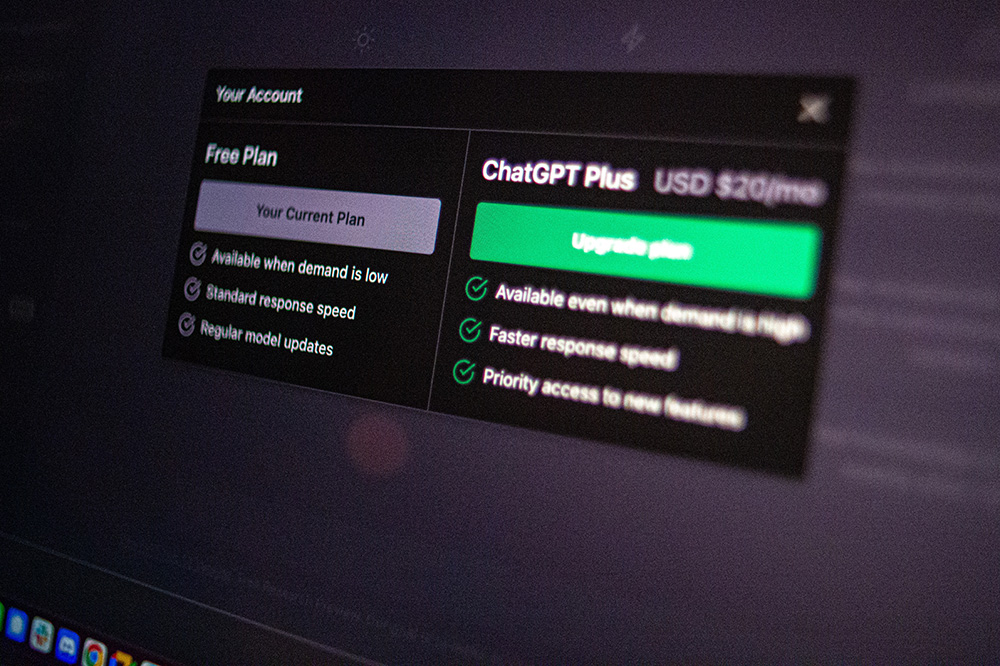

GPTs as Virtual Tutors

In the spring semester of 2024, Prof. Dr. Oliver Bendel will integrate virtual tutors into his courses at the FHNW. These are “custom versions of ChatGPT”, so-called GPTs. Social Robotics Girl is available for the elective modules on social robotics, and Digital Ethics Girl for the compulsory modules “Ethik und Recht” and “Ethics and Law” within the Wirtschaftsinformatik and Business Information Technology degree programmes (FHNW School of Business) and “Recht und Ethik” within Geomatics (FHNW School of Architecture, Civil Engineering and Geomatics). The virtual tutors have the “world knowledge” of GPT-4, but also the specific expertise of the technology philosopher and business information systems scientist from Zurich. A quarter of a century ago, he wrote his doctoral thesis at the University of St. Gallen on pedagogical agents, i.e., chatbots, voice assistants, and early forms of social robots in the learning sector. Together with Stefanie Hauske from the ZHAW, he recently wrote the paper “How Can GenAI Foster Well-being in Self-regulated Learning?”, which was accepted at the AAAI 2024 Spring Symposium “Impact of GenAI on Social and Individual Well-being” at Stanford University and will be presented on site at the end of March. The paper is not about teaching at universities, but about the self-regulated learning of employees in companies.

ChatGPT Can See, Hear, and Speak

On September 25, 2023, OpenAI reported in its blog: “We are beginning to roll out new voice and image capabilities in ChatGPT. They offer a new, more intuitive type of interface by allowing you to have a voice conversation or show ChatGPT what you’re talking about.” (OpenAI Blog, 25 September 2023) The company gives some examples of using ChatGPT in everyday life: “Snap a picture of a landmark while traveling and have a live conversation about what’s interesting about it. When you’re home, snap pictures of your fridge and pantry to figure out what’s for dinner (and ask follow up questions for a step by step recipe). After dinner, help your child with a math problem by taking a photo, circling the problem set, and having it share hints with both of you.” (OpenAI Blog, 25 September 2023) But the application can not only see; it can also hear and speak: “You can now use voice to engage in a back-and-forth conversation with your assistant. Speak with it on the go, request a bedtime story for your family, or settle a dinner table debate.” (OpenAI Blog, 25 September 2023) More information is available at openai.com/blog/chatgpt-can-now-see-hear-and-speak.

GPT-4 as Multimodal Model

GPT-4 was launched by OpenAI on March 14, 2023. “GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.” (Website OpenAI) On its website, the company explains the multimodal options in more detail: “GPT-4 can accept a prompt of text and images, which – parallel to the text-only setting – lets the user specify any vision or language task. Specifically, it generates text outputs (natural language, code, etc.) given inputs consisting of interspersed text and images.” (Website OpenAI) The example that OpenAI gives is impressive. An image with multiple panels is uploaded together with the prompt “What is funny about this image? Describe it panel by panel”. GPT-4 does exactly that and comes to the conclusion: “The humor in this image comes from the absurdity of plugging a large, outdated VGA connector into a small, modern smartphone charging port.” (Website OpenAI) The technical report is available via cdn.openai.com/papers/gpt-4.pdf.

Introducing Visual ChatGPT

Researchers at Microsoft are working on a new application based on ChatGPT and visual foundation models such as Stable Diffusion. Visual ChatGPT is designed to allow users to generate images from text input and then edit individual elements. In their paper “Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models”, Chenfei Wu and his co-authors write: “We build a system called Visual ChatGPT, incorporating different Visual Foundation Models, to enable the user to interact with ChatGPT by 1) sending and receiving not only languages but also images 2) providing complex visual questions or visual editing instructions that require the collaboration of multiple AI models with multi-steps” – and, not to forget: “3) providing feedback and asking for corrected results” (Wu et al. 2023). For example, a suitable prompt generates an image of a landscape with blue sky, hills, meadows, flowers, and trees. A second prompt then instructs Visual ChatGPT to make the hills higher and the sky duskier and cloudier. One can also ask the program what color the flowers are and recolor them with another prompt. A final prompt makes the trees in the foreground appear greener. The paper can be downloaded from arxiv.org.