The paper “Co-Robots as Care Robots” by Oliver Bendel, Alina Gasser and Joel Siebenmann, accepted at the AAAI 2020 Spring Symposium “Applied AI in Healthcare: Safety, Community, and the Environment”, can be accessed via arxiv.org/abs/2004.04374. From the abstract: “Cooperation and collaboration robots, co-robots or cobots for short, are an integral part of factories. For example, they work closely with the fitters in the automotive sector, and everyone does what they do best. However, the novel robots are not only relevant in production and logistics, but also in the service sector, especially where proximity between them and the users is desired or unavoidable. For decades, individual solutions of a very different kind have been developed in care. Now experts are increasingly relying on co-robots and teaching them the special tasks that are involved in care or therapy. This article presents the advantages, but also the disadvantages of co-robots in care and support, and provides information with regard to human-robot interaction and communication. The article is based on a model that has already been tested in various nursing and retirement homes, namely Lio from F&P Robotics, and uses results from accompanying studies. The authors can show that co-robots are ideal for care and support in many ways. Of course, it is also important to consider a few points in order to guarantee functionality and acceptance.” Due to the outbreak of the COVID-19 pandemic, the physical meeting to be held at Stanford University was postponed. It will take place in November 2020 in Washington (AAAI 2020 Fall Symposium Series).

WHO Fights COVID-19 Misinformation with Viber Chatbot

A new WHO chatbot on Rakuten Viber aims to get accurate information about COVID-19 to people in several languages. “Once subscribed to the WHO Viber chatbot, users will receive notifications with the latest news and information directly from WHO. Users can also learn how to protect themselves and test their knowledge on coronavirus through an interactive quiz that helps bust myths. Another goal of the partnership is to fight misinformation.” (Website WHO) Some days ago, the Centers for Disease Control and Prevention of the United States Department of Health and Human Services launched a chatbot that helps people decide what to do if they have potential Coronavirus symptoms such as fever, cough, or shortness of breath. However, this dialog system is only intended for people who are permanently or temporarily in the USA. The new WHO chatbot is freely available in English, Russian, and Arabic, with more than 20 languages to be added.

The Coronavirus Chatbot

The Centers for Disease Control and Prevention of the United States Department of Health and Human Services have launched a chatbot that will help people decide what to do if they have potential Coronavirus symptoms such as fever, cough, or shortness of breath. This was reported by the magazine MIT Technology Review on 24 March 2020. “The hope is the self-checker bot will act as a form of triage for increasingly strained health-care services.” (MIT Technology Review, 24 March 2020) According to the magazine, the chatbot asks users questions about their age, gender, and location, and about any symptoms they’re experiencing. It also inquires whether they may have met someone diagnosed with COVID-19. On the basis of the users’ replies, it recommends the best next step. “The bot is not supposed to replace assessment by a doctor and isn’t intended to be used for diagnosis or treatment purposes, but it could help figure out who most urgently needs medical attention and relieve some of the pressure on hospitals.” (MIT Technology Review, 24 March 2020) The service is intended for people who are currently located in the US. International research is being done not only on useful but also on moral chatbots.

The BESTBOT on Mars

Living, working, and sleeping in small spaces next to the same people for months or years would be stressful for even the fittest and toughest astronauts. Neel V. Patel underlines this fact in a recent article for MIT Technology Review. If they are near Earth, they can talk to psychologists. But if they are far away, it will be difficult. Moreover, in the future there could be astronauts in space whose clients cannot afford human psychological support. “An AI assistant that’s able to intuit human emotion and respond with empathy could be exactly what’s needed, particularly on future missions to Mars and beyond. The idea is that it could anticipate the needs of the crew and intervene if their mental health seems at risk.” (MIT Technology Review, 14 January 2020) NASA wants to develop such an assistant together with the Australian tech firm Akin. They could build on research by Oliver Bendel. Together with his teams, he developed the GOODBOT in 2013 and the BESTBOT in 2018. Both can detect users’ problems and react adequately to them. The more recent chatbot even combines face recognition with emotion recognition. If it detects a discrepancy between the recognized emotion and what the user has said or written, it will make this a subject of discussion. The BESTBOT on Mars – it would like that.
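The discrepancy check described above can be illustrated with a small sketch. It is not the BESTBOT's actual implementation: the word lists, the emotion labels, and the function names `text_polarity`, `face_polarity`, and `has_discrepancy` are all assumptions made for illustration, and a real system would use trained models for both the text and the face.

```python
# Hypothetical sketch: none of these names or word lists come from the BESTBOT.
# It compares the polarity of a user's message with the polarity of an emotion
# label that a (not shown) face-based emotion recognizer is assumed to return.

NEGATIVE_WORDS = {"sad", "tired", "lonely", "afraid", "bad", "stressed"}
POSITIVE_WORDS = {"fine", "good", "great", "happy", "okay", "well"}

NEGATIVE_FACES = {"sad", "angry", "fearful"}
POSITIVE_FACES = {"happy"}


def text_polarity(message: str) -> str:
    """Classify a message as positive, negative, or neutral by keyword count."""
    words = {w.strip(".,!?").lower() for w in message.split()}
    positive = len(words & POSITIVE_WORDS)
    negative = len(words & NEGATIVE_WORDS)
    if positive > negative:
        return "positive"
    if negative > positive:
        return "negative"
    return "neutral"


def face_polarity(face_emotion: str) -> str:
    """Map an assumed emotion label from the face recognizer to a polarity."""
    if face_emotion in POSITIVE_FACES:
        return "positive"
    if face_emotion in NEGATIVE_FACES:
        return "negative"
    return "neutral"


def has_discrepancy(message: str, face_emotion: str) -> bool:
    """True if text and face clearly point in opposite directions."""
    text = text_polarity(message)
    face = face_polarity(face_emotion)
    return "neutral" not in (text, face) and text != face


def respond(message: str, face_emotion: str) -> str:
    """Raise the mismatch as a topic instead of silently trusting one channel."""
    if has_discrepancy(message, face_emotion):
        return "What you write and how you look do not seem to match. Would you like to talk about it?"
    return "Thank you for telling me."
```

In this sketch, a message such as "I am fine." combined with the assumed face label "sad" would lead the chatbot to address the mismatch, while neutral input on either channel is never treated as a contradiction.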

The Old, New Neons

The company Neon picks up an old concept with its Neons, namely that of avatars. Twenty years ago, Oliver Bendel distinguished between two different types in the Lexikon der Wirtschaftsinformatik. With reference to the second, he wrote: “Avatars, on the other hand, can represent any figure with certain functions. Such avatars appear on the Internet – for example as customer advisors and newsreaders – or populate the adventure worlds of computer games as game partners and opponents. They often have an anthropomorphic appearance and independent behaviour or even real characters …” (Lexikon der Wirtschaftsinformatik, 2001, own translation) It is precisely this type that the company, which is part of the Samsung Group and was founded by Pranav Mistry, is now adapting, taking advantage of today’s possibilities. “These are virtual figures that are generated entirely on the computer and are supposed to react autonomously in real time; Mistry spoke of a latency of less than 20 milliseconds.” (Heise Online, 8 January 2020, own translation) The Neons are supposed to show emotions (as do some social robots that are conquering the market) and thus facilitate and strengthen bonds. “The AI-driven character is neither a language assistant à la Bixby nor an interface to the Internet. Instead, it is a friend who can speak several languages, learn new skills and connect to other services, Mistry explained at CES.” (Heise Online, 8 January 2020, own translation)

AI Workshop at the University of Potsdam

In 2018, Dr. Yuefang Zhou and Prof. Dr. Martin Fischer initiated the first international workshop on intimate human-robot relations at the University of Potsdam, “which resulted in the publication of an edited book on developments in human-robot intimate relationships”. This year, Prof. Dr. Martin Fischer, Prof. Dr. Rebecca Lazarides, and Dr. Yuefang Zhou are organizing the second edition. “As interest in the topic of humanoid AI continues to grow, the scope of the workshop has widened. During this year’s workshop, international experts from a variety of different disciplines will share their insights on motivational, social and cognitive aspects of learning, with a focus on humanoid intelligent tutoring systems and social learning companions/robots.” (Website Embracing AI) The international workshop “Learning from Humanoid AI: Motivational, Social & Cognitive Perspectives” will take place on 29 and 30 November 2019 at the University of Potsdam. Keynote speakers are Prof. Dr. Tony Belpaeme, Prof. Dr. Oliver Bendel, Prof. Dr. Angelo Cangelosi, Dr. Gabriella Cortellessa, Dr. Kate Devlin, Prof. Dr. Verena Hafner, Dr. Nicolas Spatola, Dr. Jessica Szczuka, and Prof. Dr. Agnieszka Wykowska. Further information is available at embracingai.wordpress.com/.

Talk to Transformer

Artificial intelligence is spreading into more and more application areas. American scientists have now developed a system that can supplement texts: “Talk to Transformer”. The user enters a few sentences – and the AI system adds further passages. “The system is based on a method called DeepQA, which is based on the observation of patterns in the data. This method has its limitations, however, and the system is only effective for data on the order of 2 million words, according to a recent news article. For instance, researchers say that the system cannot cope with the large amounts of data from an academic paper. Researchers have also been unable to use this method to augment texts from academic sources. As a result, DeepQA will have limited application, according to the researchers. The scientists also note that there are more applications available in the field of text augmentation, such as automatic transcription, the ability to translate text from one language to another and to translate text into other languages.” The sentences in quotation marks are not from the author of this blog. They were written by the AI system itself. You can try it via talktotransformer.com.

Honey, I shrunk the AI

Some months ago, researchers at the University of Massachusetts showed the climate toll of machine learning, especially deep learning. Training Google’s BERT, with its 340 million parameters, emitted nearly as much carbon as a round-trip flight between the East and West coasts. According to Technology Review, the trend could also accelerate the concentration of AI research into the hands of a few big tech companies. “Under-resourced labs in academia or countries with fewer resources simply don’t have the means to use or develop such computationally expensive models.” (Technology Review, 4 October 2019) In response, some researchers are focused on shrinking the size of existing models without losing their capabilities. The magazine wrote enthusiastically: “Honey, I shrunk the AI”. (Technology Review, 4 October 2019) The advantages concern not only the environment and access to state-of-the-art AI. According to Technology Review, tiny models will help bring the latest AI advancements to consumer devices. “They avoid the need to send consumer data to the cloud, which improves both speed and privacy. For natural-language models specifically, more powerful text prediction and language generation could improve myriad applications like autocomplete on your phone and voice assistants like Alexa and Google Assistant.” (Technology Review, 4 October 2019)

Towards Full Body Fakes

“Within the field of deepfakes, or ‘synthetic media’ as researchers call it, much of the attention has been focused on fake faces potentially wreaking havoc on political reality, as well as other deep learning algorithms that can, for instance, mimic a person’s writing style and voice. But yet another branch of synthetic media technology is fast evolving: full body deepfakes.” (Fast Company, 21 September 2019) Last year, researchers from the University of California, Berkeley demonstrated in an impressive way how deep learning can be used to transfer dance moves from a professional onto the bodies of amateurs. Also in 2018, a team from the University of Heidelberg published a paper on teaching machines to realistically render human movements. And in spring of this year, a Japanese company developed an AI that can generate whole body models of nonexistent persons. “While it’s clear that full body deepfakes have interesting commercial applications, like deepfake dancing apps or in fields like athletics and biomedical research, malicious use cases are an increasing concern amid today’s polarized political climate riven by disinformation and fake news.” (Fast Company, 21 September 2019) Was a person really at a certain place? Did he or she really take part in a demonstration and throw stones? In the future, you won’t know for sure.

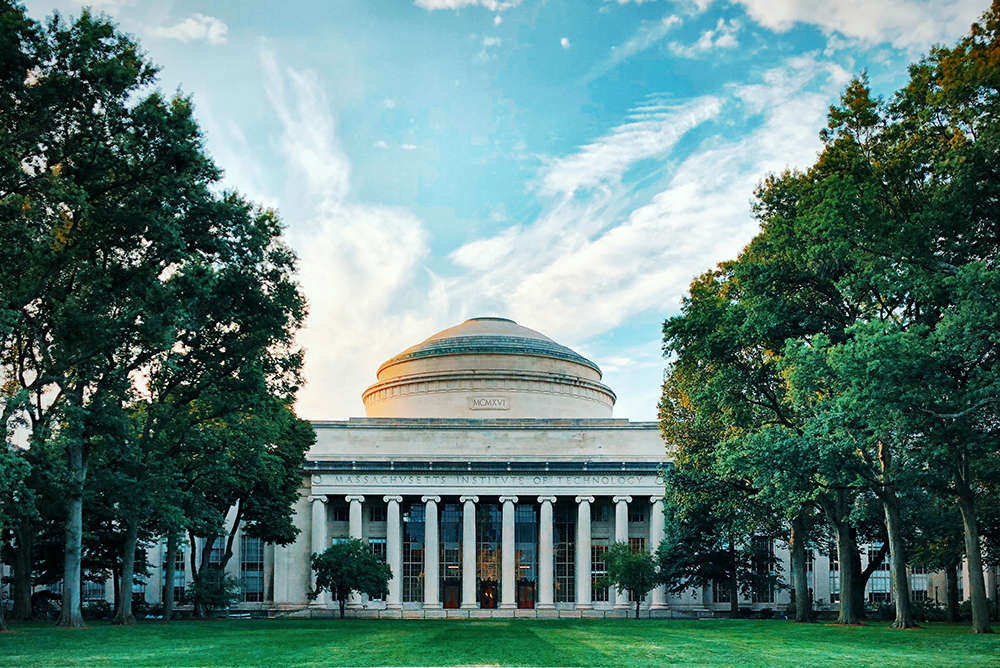

Ethics in AI for Kids and Teens

In summer 2019, Blakeley Payne ran a very special course at MIT. According to an article in Quartz magazine, the graduate student had created an AI ethics curriculum to make kids and teens aware of how AI systems mediate their everyday lives. “By starting early, she hopes the kids will become more conscious of how AI is designed and how it can manipulate them. These lessons also help prepare them for the jobs of the future, and potentially become AI designers rather than just consumers.” (Quartz, 4 September 2019) Not everyone is convinced that artificial intelligence is the right topic for kids and teens. “Some argue that developing kindness, citizenship, or even a foreign language might serve students better than learning AI systems that could be outdated by the time they graduate. But Payne sees middle school as a unique time to start kids understanding the world they live in: it’s around ages 10 to 14 that kids start to experience higher-level thoughts and deal with complex moral reasoning. And most of them have smartphones loaded with all sorts of AI.” (Quartz, 4 September 2019) There is no doubt that the MIT course could serve as a model for schools around the world. The renowned university once again seems to be setting new standards.