NAO and Pepper from Aldebaran – part of the United Robotics Group – are flagships of social robotics. The smaller one saw the light of day in 2006 (in the meantime version 6 is available), the larger one in 2014, and they are both adored at university events such as Prof. Dr. Oliver Bendel’s elective modules on social robotics at the FHNW or in companies. Everyone appreciates the cartoonish design and natural language skills. And they laugh their heads off when they stroke Pepper and it says it feels like a cat. For some time now, there have been robots with two separate displays for the eyes, such as Alpha Mini, or with a display for the mouth, such as Navel. Unitree Go2 and Unitree H1 have amazing mobility. The community is asking when NAO II and Pepper II will be released, not as new versions but as new models. However, it’s a bit like the VW Beetle. It is very demanding to reissue a classic, and old fans are quickly disappointed, as the New Beetle and Beetle II have shown. NAO II and Pepper II should also have two separate displays for their eyes. The Navel displays, which have a three-dimensional effect, can be used as a model. For Pepper, displays for the mouth and cheeks could also be considered. This would allow it to display facial expressions and blush when you pay it a compliment. Or it would smile when you stroked it. The bodies would also need a general overhaul. Both social robots should – Cozmo is a role model here – look alive at all times. And both should at least be able to grasp and hold something, like a Unitree H1. At the same time, both should be instantly recognizable. VW has probably learned from the reactions of disappointed fans. Perhaps the companies should sit down together. And bring a Beetle III, a NAO II, and a Pepper II onto the market in 2025.

The Animal Whisperer

When humans come into contact with wildlife, farm animals, and pets, they sometimes run the risk of being injured or killed. They may be attacked by bears, wolves, cows, horses, or dogs. Experts can use an animal’s body language to determine whether or not danger is imminent. Context is also important, such as whether a mother cow is with her calves. The multimodality of large language models enables novel applications. For example, ChatGPT can evaluate images. This ability can be used to interpret the body language of animals, thus using and replacing expert knowledge. Prof. Dr. Oliver Bendel, who has been involved with animal-computer interaction and animal-machine interaction for many years, has initiated a project called “The Animal Whisperer” in this context. The goal is to create a prototype application based on GenAI that can be used to interpret the body language of an animal and avert danger for humans. GPT-4 or an open-source language model should be used to create the prototype. It should be augmented with appropriate material, taking into account animals such as bears, wolves, cows, horses, and dogs. Approaches may include fine-tuning or prompt engineering. The project will begin in March 2024 and the results will be available in the summer of the same year (Image: DALL-E 3).

Goodbye Apple Car, Hello GenAI

Apple’s ambitions to enter the automotive business are apparently history. This is reported by Bloomberg. “Apple Inc. is canceling a decadelong effort to build an electric car, according to people with knowledge of the matter, abandoning one of the most ambitious projects in the history of the company.” (Bloomberg, 27 February 2024) Numerous media outlets around the world have picked up the story. The company had intended to launch an autonomous electric car. Apple never communicated this publicly, but it was common knowledge. The project, which is part of the Special Projects Group (SPG), is now to be wound down, and the remaining employees are to focus on generative AI, where Apple wants to catch up in the coming months. So you could say: goodbye Apple car, hello GenAI.

Start of the European AI Office

The European AI Office was established in February 2024. The European Commission’s website states: “The European AI Office will be the center of AI expertise across the EU. It will play a key role in implementing the AI Act – especially for general-purpose AI – foster the development and use of trustworthy AI, and international cooperation.” (European Commission, February 22, 2024) And further: “The European AI Office will support the development and use of trustworthy AI, while protecting against AI risks. The AI Office was established within the European Commission as the center of AI expertise and forms the foundation for a single European AI governance system.” (European Commission, February 22, 2024) According to the EU, it wants to ensure that AI is safe and trustworthy. The AI Act is the world’s first comprehensive legal framework for AI that guarantees the health, safety and fundamental rights of people and provides legal certainty for companies in the 27 member states.

Saving Languages with Language Models

On February 19, 2024, the article “@llegra: a chatbot for Vallader” by Oliver Bendel and Dalil Jabou was published in the International Journal of Information Technology. From the abstract: “Extinct and endangered languages have been preserved primarily through audio conservation and the collection and digitization of scripts and have been promoted through targeted language acquisition efforts. Another possibility would be to build conversational agents like chatbots or voice assistants that can master these languages. This would provide an artificial, active conversational partner which has knowledge of the vocabulary and grammar and allows one to learn with it in a different way. The chatbot, @llegra, with which one can communicate in the Rhaeto-Romanic idiom Vallader was developed in 2023 based on GPT-4. It can process and output text and has voice output. It was additionally equipped with a manually created knowledge base. After laying the conceptual groundwork, this paper presents the preparation and implementation of the project. In addition, it summarizes the tests that native speakers conducted with the chatbot. A critical discussion elaborates advantages and disadvantages. @llegra could be a new tool for teaching and learning Vallader in a memorable and entertaining way through dialog. It not only masters the idiom, but also has extensive knowledge about the Lower Engadine, that is, the area where Vallader is spoken. In conclusion, it is argued that conversational agents are an innovative approach to promoting and preserving languages.” Oliver Bendel has been increasingly focusing on dead, extinct and endangered languages for some time. He believes that conversational agents can help to strengthen and save them.

Bao in Switzerland

From February 15 to 17, 2024, the elective module “Social Robots from a Technical, Economic and Ethical Perspective” by Prof. Dr. Oliver Bendel took place at the FHNW School of Business in Brugg-Windisch. This was the sixth time the course had been held and the first time it had taken place on this campus. The participants were mainly prospective business economists. A GPT called Social Robotics Girl, which is specialized in the topic, was available. A presentation by Claude Toussaint from Navel Robotics enriched the event. He spoke not only about the development of the model, but also about the financing of the company. Among the robots at the demo was Unitree Go2. It comes from the Social Robots Lab (SRL) privately funded by Oliver Bendel and is called Bao (Chinese for “treasure, jewel”) by him. The SRL also includes Alpha Mini, Cozmo, and Hugvie – and the voice-controlled Vector, which was not used in the lessons. When designing their own social robots, the students worked with image generators, this time mainly with DALL-E 3.

Generative AI for the Blind

The paper “How Can Generative AI Enhance the Well-being of the Blind?” by Oliver Bendel is now available as a preprint at arxiv.org/abs/2402.07919. It was accepted at the AAAI 2024 Spring Symposium “Impact of GenAI on Social and Individual Well-being”. From the abstract: “This paper examines the question of how generative AI can improve the well-being of blind or visually impaired people. It refers to a current example, the Be My Eyes app, in which the Be My AI feature was integrated in 2023, which is based on GPT-4 from OpenAI. The author’s tests are described and evaluated. There is also an ethical and social discussion. The power of the tool, which can analyze still images in an amazing way, is demonstrated. Those affected gain a new independence and a new perception of their environment. At the same time, they are dependent on the world view and morality of the provider or developer, who prescribe or deny them certain descriptions. An outlook makes it clear that the analysis of moving images will mean a further leap forward. It is fair to say that generative AI can fundamentally improve the well-being of blind and visually impaired people and will change it in various ways.” Oliver Bendel will present the paper at Stanford University on March 25-27. It is his ninth consecutive appearance at the AAAI Spring Symposia, which this time consist of eight symposia on artificial intelligence.

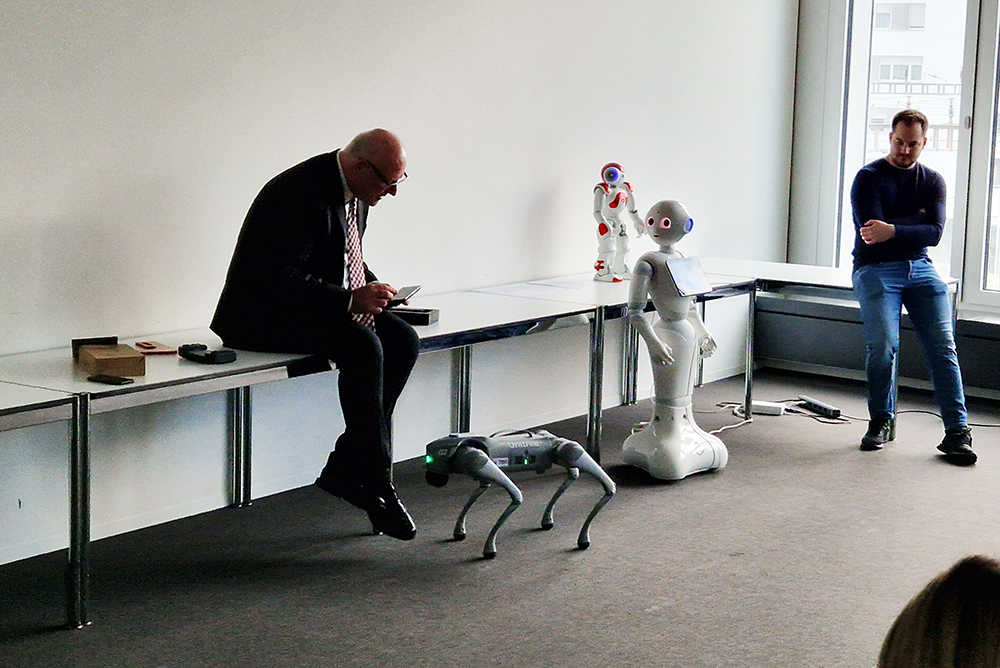

Elective Module “Social Robots”

From February 12 to 14, 2024, the elective module “Social Robots” took place at the FHNW School of Business in Olten. This was the fifth time it had been held. This time, a GPT specialized in the topic was available for the first time. Prof. Dr. Oliver Bendel repeatedly had it explain the facts and then made additions. Social Robotics Girl, as she is called, was also used by the students in their group work. In this way, they benefited from the lecturer’s knowledge without having to search for the right text passages or call him in. Among the robots at the demo was Unitree Go2 for the first time. It stole the show from all the others, NAO, Pepper and even Alpha Mini. This was mainly due to its fast, fluid movements, its diverse forms of behavior and its surprising tricks. The robot comes from the Social Robots Lab privately funded by Prof. Dr. Oliver Bendel and is called Bao (Chinese for “treasure, jewel”) by him. As in 2023, the students used image generators to design their own social robots, this time mainly DALL-E 3, because some of them had ChatGPT Plus access. The sixth edition will take place in Brugg-Windisch from February 15, 2024. It will mainly be attended by prospective business economists (Photo: Armend Nasufi).

A Robot Boat to Clean the Rivers

Millions of tons of plastic waste float down polluted urban rivers and industrial waterways and into the world’s oceans every year. According to Microsoft, a Hong Kong-based startup has come up with a solution to help stem these devastating waste flows. “Open Ocean Engineering has developed Clearbot Neo – a sleek AI-enabled robotic boat that autonomously collects tons of floating garbage that otherwise would wash into the Pacific from the territory’s busy harbor.” (Deayton 2023) After a long period of development, its inventors plan to scale up and have fleets of Clearbot Neos cleaning up and protecting waters around the globe. The start-up’s efforts are commendable. However, polluted rivers and harbors are not the only problem. A large proportion of plastic waste comes from the fishing industry. This was proven last year by The Ocean Cleanup project. So there are several places to start: We need to avoid plastic waste. Fishing activities must be reduced. And rivers, lakes and oceans must be cleared of plastic waste (Image: DALL-E 3).

GPTs as Virtual Tutors

In the spring semester of 2024, Prof. Dr. Oliver Bendel will integrate virtual tutors into his courses at the FHNW. These are “custom versions of ChatGPT”, so-called GPTs. Social Robotics Girl is available for the elective modules on social robotics, and Digital Ethics Girl for the compulsory modules “Ethik und Recht” and “Ethics and Law” within the Wirtschaftsinformatik and Business Information Technology degree programmes (FHNW School of Business) and “Recht und Ethik” within Geomatics (FHNW School of Architecture, Civil Engineering and Geomatics). The virtual tutors have the “world knowledge” of GPT-4, but also the specific expertise of the technology philosopher and business information systems scientist from Zurich. A quarter of a century ago, he worked on his doctoral thesis at the University of St. Gallen on pedagogical agents, i.e., chatbots, voice assistants, and early forms of social robots in the learning sector. Together with Stefanie Hauske from the ZHAW, he recently wrote the paper “How Can GenAI Foster Well-being in Self-regulated Learning?”, which was accepted at the AAAI 2024 Spring Symposium “Impact of GenAI on Social and Individual Well-being” at Stanford University and will be presented on site at the end of March. It is not about teaching at universities, but about the self-regulated learning of employees in companies.