Driving in cities is a very complex matter. There are several reasons for this: You have to judge hundreds of objects and events at all times. You have to communicate with people. And you should be able to change decisions spontaneously, for example because you remember that you have to buy something. That’s a bad prospect for an autonomous car. Of course it can use some tricks: It can drive very slowly. It can use virtual tracks or special lanes, signals and sounds. A bus or shuttle is able to use such tricks, but hardly a car. Autonomous individual transport in cities will only be possible if the cities are redesigned. This was done a few decades ago. And it wasn’t a good idea at all. So don’t let autonomous cars drive in the cities, but let them drive on the highways. Should autonomous cars make moral decisions about the lives and deaths of pedestrians and cyclists? They had better not. Moral machines are a valuable innovation in certain contexts. But not in the traffic of cities. Pedestrians and cyclists rarely get onto the highway. There are many reasons why we should allow autonomous cars only there.

Atlas Does a Handstand

In a new video, Boston Dynamics shows its humanoid Atlas performing various gymnastics exercises. This was reported by Heise on 26 September 2019 with reference to various sources. The well-known robot is 1.50 meters tall and weighs 80 kilograms. It has two legs and two arms and the suggestion of a head. Among other things, it does a handstand and several somersaults, and it jumps with rotation around its own axis. Evidently, it can perform complex physical movements that are typically possible only for humans. According to Heise, a new optimization algorithm, which translates certain maneuvers into executable reference movements, makes this progress possible. Boston Dynamics is part of SoftBank. The Japanese company also manufactures Pepper and Nao, the well-known robots of the formerly independent French company Aldebaran Robotics.

Robots for Climate

On 20 September 2019, FridaysforFuture called for worldwide climate strikes. Hundreds of thousands of people around the world took to the streets to protest for more sustainable industry and long-term climate policies to combat global warming. Technological progress and the protection of the environment do not necessarily have to contradict each other. Quite the opposite: here are three robots which show that technology can be used to achieve climate goals. Planting trees is the most efficient strategy to recover biodiversity and stop climate change. However, this method requires a lot of human labor. The GrowBot automates this task, resulting in a planting rate that is 10 times that of trained human planters. Unlike planting drones, this small truck-like robot not only spreads seeds but also plants small trees into the soil, giving them a better chance to survive and fostering reforestation. The bio-inspired Row-bot converts organic matter into operating power, just like the water boatman (a bug). The robot’s engine is based on a microbial fuel cell (MFC), which enables the robot to swim. Researchers from the Bristol Robotics Laboratory developed the 3D-printed Row-bot for environmental clean-up operations, for example after harmful algal blooms or oil spills, and for monitoring the impact of (natural or man-made) environmental catastrophes. Next-level recycling like in the movie WALL·E can be expected from the sorting robot RoCycle, which is being developed at MIT. Unlike classic recycling machines, the robot is capable of distinguishing paper, plastic and metal garbage by using pressure sensors. This tactile solution is 85% accurate at detecting materials in stationary use and 63% accurate when attached to an assembly line. With cameras and magnets, the researchers aim to further optimise recycling to help clean up the Earth.

Towards Full Body Fakes

“Within the field of deepfakes, or ‘synthetic media’ as researchers call it, much of the attention has been focused on fake faces potentially wreaking havoc on political reality, as well as other deep learning algorithms that can, for instance, mimic a person’s writing style and voice. But yet another branch of synthetic media technology is fast evolving: full body deepfakes.” (Fast Company, 21 September 2019) Last year, researchers from the University of California, Berkeley demonstrated in an impressive way how deep learning can be used to transfer dance moves from a professional onto the bodies of amateurs. Also in 2018, a team from the University of Heidelberg published a paper on teaching machines to realistically render human movements. And in the spring of this year, a Japanese company developed an AI that can generate whole body models of nonexistent persons. “While it’s clear that full body deepfakes have interesting commercial applications, like deepfake dancing apps or in fields like athletics and biomedical research, malicious use cases are an increasing concern amid today’s polarized political climate riven by disinformation and fake news.” (Fast Company, 21 September 2019) Was a person really at a certain place? Did he or she really take part in a demonstration and throw stones? In the future, you won’t know for sure.

Robot Priests Can Perform Your Funeral

“Robot priests can bless you, advise you, and even perform your funeral” – this is the title of an article published in Vox on 9 September 2019. “A new priest named Mindar is holding forth at Kodaiji, a 400-year-old Buddhist temple in Kyoto, Japan. Like other clergy members, this priest can deliver sermons and move around to interface with worshippers. But Mindar comes with some … unusual traits. A body made of aluminum and silicone, for starters.” (Vox, 9 September 2019) The robot looks like Kannon, the Buddhist deity of mercy. According to Vox, it is an attempt to reignite people’s passion for their faith in a country where religious affiliation is on the decline. “For now, Mindar is not AI-powered. It just recites the same preprogrammed sermon about the Heart Sutra over and over. But the robot’s creators say they plan to give it machine-learning capabilities that’ll enable it to tailor feedback to worshippers’ specific spiritual and ethical problems.” (Vox, 9 September 2019) One may hope that the robot will not bring people back to faith, but rather fill them with enthusiasm for science – the science that created Mindar.

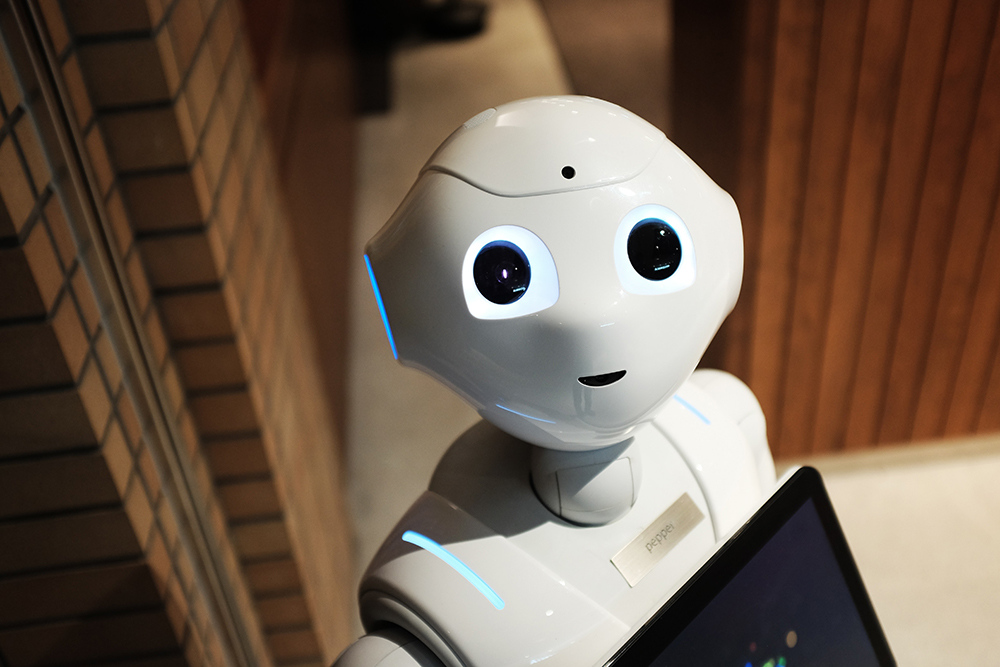

Pepper’s New Job

SoftBank Robotics has announced that it will operate a cafe in Tokyo. The humanoid robot Pepper is to play a major role in this. But people will not disappear. They will of course be guests, but also, as in the traditional establishments of this kind, waitresses and waiters. At least that’s what ZDNET reports. “The cafe, called Pepper Parlor, will utilise both human and robot staff to serve customers, and marks the company’s first time operating a restaurant or cafe.” (ZDNET, 13 September 2019) According to SoftBank Robotics, the aim is “to create a space where people can easily experience the coexistence of people and robots and enjoy the evolution of robots and the future of living with robots”. “We want to make robots not only for convenience and efficiency, but also to expand the possibilities of people and bring happiness.” (ZDNET, 13 September 2019) This opens up new career opportunities for the little robot, which recognizes and shows emotions, listens and talks, and is programmed to give high-fives. It has long since left the lap of its family and can be found in shopping malls and nursing homes. Now it will be serving waffles in a cafe in Tokyo.

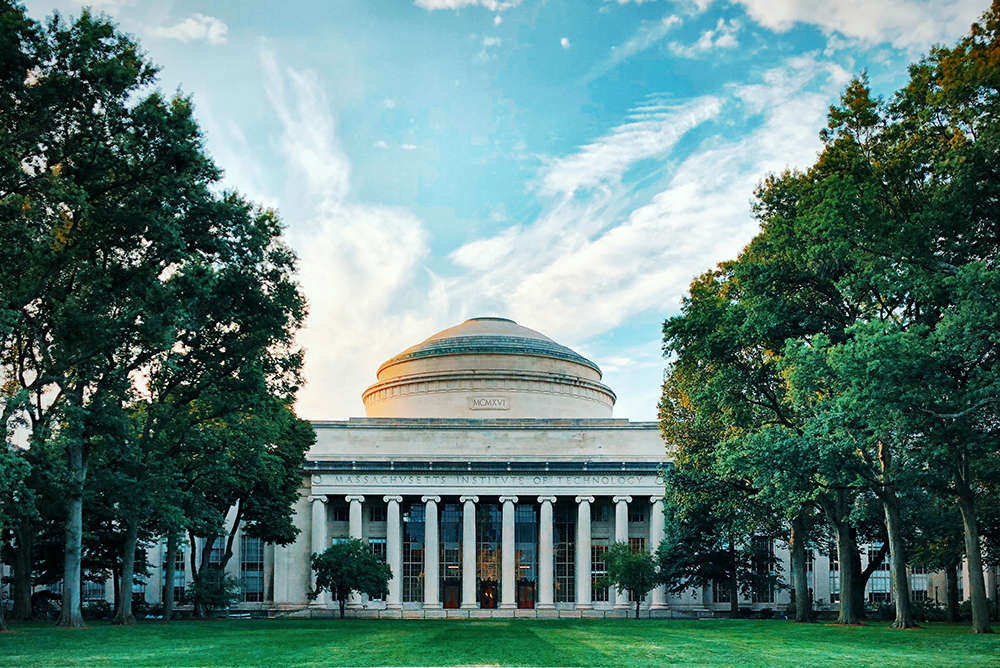

Ethics in AI for Kids and Teens

In summer 2019, Blakeley Payne ran a very special course at MIT. According to an article in Quartz magazine, the graduate student had created an AI ethics curriculum to make kids and teens aware of how AI systems mediate their everyday lives. “By starting early, she hopes the kids will become more conscious of how AI is designed and how it can manipulate them. These lessons also help prepare them for the jobs of the future, and potentially become AI designers rather than just consumers.” (Quartz, 4 September 2019) Not everyone is convinced that artificial intelligence is the right topic for kids and teens. “Some argue that developing kindness, citizenship, or even a foreign language might serve students better than learning AI systems that could be outdated by the time they graduate. But Payne sees middle school as a unique time to start kids understanding the world they live in: it’s around ages 10 to 14 year that kids start to experience higher-level thoughts and deal with complex moral reasoning. And most of them have smartphones loaded with all sorts of AI.” (Quartz, 4 September 2019) There is no doubt that the MIT course could be a role model for schools around the world. The renowned university once again seems to be setting new standards.

Permanent Record

The whistleblower Edward Snowden spoke to the Guardian about his new life and concerns for the future. The reason for the two-hour interview was his book “Permanent Record”, which will be published on 17 September 2019. “In his book, Snowden describes in detail for the first time his background, and what led him to leak details of the secret programmes being run by the US National Security Agency (NSA) and the UK’s secret communication headquarters, GCHQ.” (Guardian, 13 September 2019) According to the Guardian, Snowden said: “The greatest danger still lies ahead, with the refinement of artificial intelligence capabilities, such as facial and pattern recognition.” (Guardian, 13 September 2019) His public appearances and interviews are rather few. On 7 September 2016, the movie “Snowden” was shown as a preview in the Cinéma Vendôme in Brussels. Jan Philipp Albrecht, Member of the European Parliament, invited Viviane Reding, the Luxembourg politician and journalist, as well as authors and scientists such as Yvonne Hofstetter and Oliver Bendel. After the preview, Edward Snowden was connected to the participants via videoconference for almost three quarters of an hour.

The New Dangers of Face Recognition

The dangers of face recognition are being discussed more and more. A new initiative is aimed at banning the use of the technology to monitor the American population. The AI Now Institute already warned of the risks in 2018, as did Oliver Bendel. The ethicist had a particular use in mind. In the 21st century, attempts are increasingly being made to connect face recognition with the pseudoscience of physiognomy, which has its origins in ancient times. From the appearance of persons, conclusions are drawn about their inner self, and attempts are made to identify character traits, personality traits and temperament, or political and sexual orientation. Biometrics plays a role in this concept. It was founded in the eighteenth century, when physiognomy under the lead of Johann Caspar Lavater had its dubious climax. In his paper “The Uncanny Return of Physiognomy”, Oliver Bendel elaborates the basic principles of this topic; selected projects from research and practice are presented and, from an ethical perspective, the possibilities of face recognition are subjected to fundamental critique in this context, including the above examples. The philosopher presented his paper on 27 March 2018 at Stanford University (“AI and Society: Ethics, Safety and Trustworthiness in Intelligent Agents”, AAAI 2018 Spring Symposium Series). The whole volume is available here.

Fighting Deepfakes with Deepfakes

A deepfake (or deep fake) is a picture or video created with the help of artificial intelligence that looks authentic but is not. The methods and techniques used in this context are also referred to by this term. Machine learning and especially deep learning are used. Deepfakes are created as works of art and visual objects, or as means of discrediting, manipulation and propaganda. Politics and pornography are therefore closely interwoven with the phenomenon. According to Futurism, Facebook is teaming up with a consortium of Microsoft researchers and several prominent universities for a “Deepfake Detection Challenge”. “The idea is to build a data set, with the help of human user input, that’ll help neural networks detect what is and isn’t a deepfake. The end result, if all goes well, will be a system that can reliably [detect] fake videos online. Similar data sets already exist for object or speech recognition, but there isn’t one specifically made for detecting deepfakes yet.” (Futurism, 5 September 2019) The winning team will get a prize, presumably a substantial sum of money. Facebook is investing a total of 10 million dollars in the competition.