The title of one of the AAAI 2019 Spring Symposia was “Interpretable AI for Well-Being: Understanding Cognitive Bias and Social Embeddedness”. An important keyword here is “social embeddedness”. The social embeddedness of AI includes issues like “AI and future economics (such as basic income, impact of AI on GDP)” or “well-being society (such as happiness of citizen, life quality)”. In his paper “Are Robot Tax, Basic Income or Basic Property Solutions to the Social Problems of Automation?”, Oliver Bendel discusses and criticizes these approaches in the context of automation and digitization. He also develops a relatively unknown proposal, unconditional basic property, and presents both its potential and its risks. Oliver Bendel gave the lecture on 26 March 2019 at Stanford University, where it led to lively discussions. It was nominated for the best presentation award. The paper has now been published as a preprint and can be downloaded here.

Development of a Morality Menu

Machine ethics produces moral and immoral machines. Their morality is usually fixed, e.g. by programmed meta-rules and rules, so the machine is capable of certain actions but not of others. Another approach is the morality menu (MOME for short). With it, the owner or user transfers his or her own morality onto the machine, which then behaves, in detail, as he or she would behave. Together with his teams, Prof. Dr. Oliver Bendel developed several artifacts of machine ethics at his university from 2013 to 2018. For one of them, he designed a morality menu that has not yet been implemented. A second concept exists for a virtual assistant that can make reservations and place orders for its owner more or less independently. In the article “The Morality Menu”, the author introduces the idea of the morality menu in the context of these two concrete machines. He then discusses its advantages and disadvantages and presents possibilities for improvement. A morality menu can be a valuable extension for certain moral machines. You can download the article here. In 2019, a morality menu for a robot will be developed at the School of Business FHNW.
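To make the idea more concrete, here is a minimal sketch of a morality menu for a virtual assistant of the kind described above. It assumes that the menu is simply a set of owner-controlled switches that the assistant consults before acting; the class `MoralityMenu`, its three options and the functions `place_order` and `cancellation_message` are hypothetical illustrations, not the design from the article.

```python
from dataclasses import dataclass


@dataclass
class MoralityMenu:
    # Hypothetical menu entries; the owner sets each switch to match his or her own morality.
    reveal_machine_identity: bool = True   # say that a machine is speaking
    allow_white_lies: bool = False         # e.g. invent an excuse when cancelling
    avoid_animal_products: bool = False    # filter orders according to the owner's values


def place_order(menu: MoralityMenu, items: list) -> list:
    """Return the names of the items the assistant would order under the owner's settings."""
    chosen = []
    for item in items:
        if menu.avoid_animal_products and item.get("animal_product", False):
            continue  # the owner has ruled this item out
        chosen.append(item["name"])
    return chosen


def cancellation_message(menu: MoralityMenu) -> str:
    """Compose a reservation cancellation that respects the owner's settings."""
    prefix = "This is an automated assistant. " if menu.reveal_machine_identity else ""
    # With white lies disabled, the assistant gives a truthful but unspecific reason.
    reason = "Something urgent has come up." if menu.allow_white_lies else "Our plans have changed."
    return prefix + "I have to cancel the reservation. " + reason


menu = MoralityMenu(avoid_animal_products=True)
items = [{"name": "tofu", "animal_product": False}, {"name": "steak", "animal_product": True}]
print(place_order(menu, items))
print(cancellation_message(menu))
```

The design choice here is that the menu only filters or shapes actions the assistant would take anyway; the owner's morality is transferred as configuration rather than as learned behaviour.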

Deceptive Machines

“AI has definitively beaten humans at another of our favorite games. A poker bot, designed by researchers from Facebook’s AI lab and Carnegie Mellon University, has bested some of the world’s top players …” (The Verge, 11 July 2019) According to the magazine, Pluribus was remarkably good at bluffing its opponents. The Wall Street Journal reported: “A new artificial intelligence program is so advanced at a key human skill – deception – that it wiped out five human poker players with one lousy hand.” (Wall Street Journal, 11 July 2019) Of course, bluffing need not be equated with cheating, but interesting scientific questions arise in this context. At the conference “Machine Ethics and Machine Law” in Krakow in 2016, Ronald C. Arkin, Oliver Bendel, Jaap Hage, and Mojca Plesnicar discussed the following question on a panel: “Should we develop robots that deceive?” Ron Arkin (who works in military research) and Oliver Bendel (who does not) both concluded that we should, but for very different reasons. The ethicist from Zurich, inventor of the LIEBOT, advocates free, independent research in which problematic and deceptive machines are also developed, for the sake of an important gain in knowledge. At the same time, he is committed to regulating the areas of application (for example dating portals or military operations). Further information about Pluribus can be found in the paper itself, entitled “Superhuman AI for multiplayer poker”.

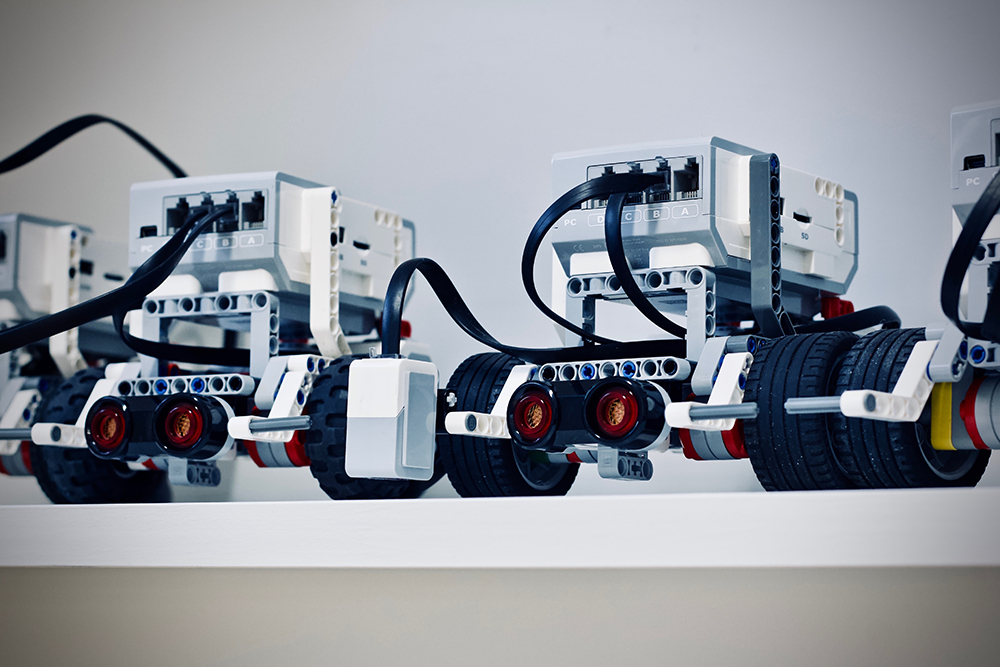

Artifacts of Machine Ethics

More and more autonomous and semi-autonomous machines, such as intelligent software agents, certain robots and drones, and self-driving cars, make decisions that have moral implications. Machine ethics as a discipline examines the possibilities and limits of moral and immoral machines. It does not only reflect on ideas but also develops artifacts like simulations and prototypes. In his talk at the University of Potsdam on 23 June 2019 (“Fundamentals and Artifacts of Machine Ethics”), Prof. Dr. Oliver Bendel outlined the fundamentals of machine ethics and presented selected artifacts of moral and immoral machines. Furthermore, he discussed a project which will be completed by the end of 2019. The GOODBOT (2013) is a chatbot that responds in a morally adequate way to the problems of its users. The LIEBOT (2016) can lie systematically, using seven different strategies. LADYBIRD (2017) is an animal-friendly robot vacuum cleaner that spares ladybirds and other insects. The BESTBOT (2018) is a chatbot that recognizes certain problems and conditions of its users with the help of text analysis and facial recognition and reacts morally to them. 2019 is the year of the E-MOMA, a machine that should be able to improve its morality on its own.

Happy Hedgehog

Between June 2019 and January 2020, the sixth artifact of machine ethics will be created at the FHNW School of Business. Prof. Dr. Oliver Bendel is the initiator and client of the project and, together with a colleague, its supervisor. Animal-machine interaction is about the design, evaluation and implementation of (usually more sophisticated or complex) machines and computer systems with which animals interact and communicate and which in turn interact and communicate with animals. While machine ethics has so far largely focused on humans, it can also prove beneficial for animals. It attempts to conceive moral machines and to implement them with the help of other disciplines such as computer science, AI and robotics. The aim of the project is the detailed description and prototypical implementation of an animal-friendly service robot, more precisely a mowing robot called HAPPY HEDGEHOG (HHH). With the help of sensors and moral rules, the robot should be able to recognize hedgehogs (especially young animals) and take appropriate measures (interrupting its work, driving the hedgehog away, informing the owner). The project is similar to an earlier one, LADYBIRD. This time, however, more emphasis will be placed on existing equipment, platforms and software. The first artifact at the university was the GOODBOT, in 2013.
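The combination of sensors and moral rules described above can be sketched as a small rule function. This is a minimal illustration under stated assumptions, not the project's implementation: it assumes that some classifier (not shown) delivers a hypothetical `Detection` with a label, a confidence and a flag for young animals, and the mapping to the three measures named in the text is an illustrative guess.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional


class Action(Enum):
    CONTINUE = auto()       # keep mowing
    STOP_BLADES = auto()    # interrupt work
    DETER = auto()          # try to drive the hedgehog away
    NOTIFY_OWNER = auto()   # inform the owner


@dataclass
class Detection:
    label: str              # output of a hypothetical image classifier
    confidence: float       # 0.0 to 1.0
    is_young: bool = False  # hypothetical flag for young animals


def decide(detection: Optional[Detection], threshold: float = 0.5) -> List[Action]:
    """Map a (hypothetical) sensor detection to the measures named in the project description."""
    if detection is None or detection.label != "hedgehog" or detection.confidence < threshold:
        return [Action.CONTINUE]
    if detection.is_young:
        # Assumption: young hedgehogs may not flee, so the robot stays stopped
        # and leaves the situation to the owner instead of trying to chase them off.
        return [Action.STOP_BLADES, Action.NOTIFY_OWNER]
    return [Action.STOP_BLADES, Action.DETER, Action.NOTIFY_OWNER]


print(decide(Detection("hedgehog", 0.8, is_young=True)))
```

The threshold is where the moral weighting shows up: an unnecessary stop costs a little mowing time, while a missed hedgehog may cost an animal's life, so an animal-friendly robot would plausibly use a low threshold.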

No Solution to the Nursing Crisis

“In Germany, around four million people will be dependent on care and nursing in 2030. Already today there is talk of a nursing crisis, which is likely to intensify further in view of demographic developments in the coming years. Fewer and fewer young people will be available to the labour market as potential carers for the elderly. Experts estimate that there will be a shortage of around half a million nursing staff in Germany by 2030. Given these dramatic forecasts, are nursing robots possibly the solution to the problem? Scientists from the disciplines of computer science, robotics, medicine, nursing science, social psychology, and philosophy explored this question at a Berlin conference of the Daimler and Benz Foundation. The machine ethicist and conference leader Professor Oliver Bendel first of all stated that many people had completely wrong ideas about care robots: ‘In the media there are often pictures or illustrations that do not correspond to reality’.” (Die Welt, 14 June 2019) This is how an article in the German newspaper Die Welt begins. In it, Norbert Lossau describes the Berlin Colloquium, which took place on 22 May 2019, in detail. The article is available in English and German. So are robots a solution to the nursing crisis? Oliver Bendel says no. They can be useful for caregivers and patients, but they do not solve the big problems.

AI Award for the Social Good

The Association for the Advancement of Artificial Intelligence (AAAI) and Squirrel AI Learning have announced a new annual award of one million dollars for the societal benefits of AI. According to an AAAI press release, the award will be sponsored by Squirrel AI Learning as part of its mission to promote the use of artificial intelligence with lasting positive effects for society. “This new international award will recognize significant contributions in the field of artificial intelligence with profound societal impact that have generated otherwise unattainable value for humanity. The award nomination and selection process will be designed by a committee led by AAAI that will include representatives from international organizations with relevant expertise that will be designated by Squirrel AI Learning.” (AAAI Press Release, 28 May 2019) The AAAI Spring Symposia have repeatedly addressed social good, also from the perspective of machine ethics. Further information is available via aaai.org/Pressroom/Releases//release-19-0528.php.

How to Treat Animals

Parallel to his work in machine ethics, Oliver Bendel is trying to establish animal-machine interaction (AMI) as a discipline. He was very impressed by Clara Mancini’s paper “Animal-Computer Interaction (ACI): A Manifesto”. In his AMI research, he mainly investigates robots, gadgets, and devices and their behavior towards animals. This raises not only moral questions but also questions concerning the design of their outer appearance and their ability to speak. The general background for his considerations is that machines and animals increasingly meet in closed, half-open and open worlds. He believes that semi-autonomous and autonomous systems should have rules so that they treat animals well: they should not disturb, frighten, injure or kill them. Examples are toy robots, domestic robots, service robots in shopping malls and agricultural robots. Jackie Snow, who writes for the New York Times, National Geographic, and the Wall Street Journal, has talked to several experts about the topic. In an article for Fast Company, she quotes the ethicists Oliver Bendel and Peter Singer. Clara Mancini also gives her point of view. The article, entitled “AI’s next ethical challenge: how to treat animals”, can be downloaded here.

Hologram Girl

The article “Hologram Girl” by Oliver Bendel first deals with the current and future technical possibilities of projecting three-dimensional human shapes into space or into vessels. It then mentions examples of holograms from literature and film, from the fiction of the past and present. Furthermore, it covers the reality of holograms in the present and future, i.e. what technicians and scientists all over the world are trying to achieve in their eager efforts to close the enormous gap between the imagined and the actual. One very specific aspect is of interest here, namely the idea that holograms serve us as objects of desire, that they join love dolls and sex robots and support us in some way. Several aspects of fictional and real holograms are analyzed: pictoriality, corporeality, motion, size, beauty and speech capacity. There are indications that three-dimensional human shapes could be considered as partners, albeit in a very specific sense. Their genuine advantages and disadvantages need to be investigated further, and a theory of holograms in love could be developed. The article is part of the book “AI Love You”, edited by Yuefang Zhou and Martin H. Fischer and published on 18 July 2019. Further information can be found via link.springer.com/book/10.1007/978-3-030-19734-6.

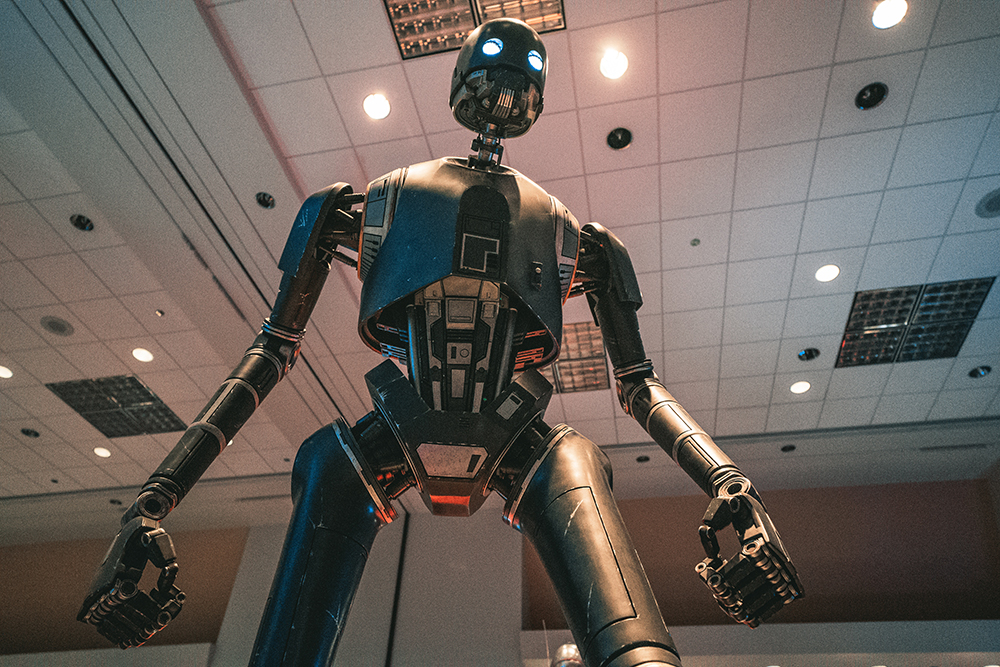

About Robophilosophy

Robophilosophy or robot philosophy is a field of philosophy that deals with robots (hardware and software robots) as well as with enhancement options such as artificial intelligence. It is not only about the reality and history of their development, but also about the history of ideas, from the works of Homer and Ovid up to science fiction books and movies. Disciplines such as epistemology, ontology, aesthetics and ethics, including information and machine ethics, are involved. The website robophilosophy.com or robophilosophy.net is operated by Oliver Bendel, a robot philosopher who lives and works in Switzerland. Guest contributions are welcome. They should be strictly scientific and must not advertise companies.