The kick-off meeting for the VISUAL project took place on March 20, 2025. The project was initiated by Prof. Dr. Oliver Bendel from the FHNW School of Business. “VISUAL” stands for “Virtual Inclusive Safaris for Unique Adventures and Learning”. There are webcams all over the world that show wild animals. Sighted people can use them to go on a photo safari from the comfort of their sofa. Blind and visually impaired people are at a disadvantage. As part of Inclusive AI – an approach and a movement that also includes apps such as Be My Eyes with the Be My AI function – a solution is to be found for them. The aim of the project is to develop a prototype by August 2025 that enables blind and visually impaired people to have webcam images or videos of wild animals described to them. The system analyzes and evaluates them with the help of a multimodal LLM. It presents the results in spoken language via an integrated text-to-speech engine. As a by-product, poaching, bush and forest fires, and other events can be detected. The project is likely to be one of the first to combine Inclusive AI with new approaches to animal-computer interaction (ACI). Doris Jovic, who is completing her degree in BIT, has been recruited to work on the project.
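The describe-then-speak flow outlined above could be sketched roughly as follows. This is only an illustration under stated assumptions, not the project's actual design: the names `Frame`, `describe_and_speak`, `PROMPT`, `fake_describe`, and `fake_speak` are hypothetical, and the multimodal LLM and the text-to-speech engine are replaced by simple stand-ins so that the sketch runs without any model or audio device.

```python
from dataclasses import dataclass
from typing import Callable, List

# All names in this sketch are hypothetical; the post does not describe
# the prototype's actual architecture or the services it uses.


@dataclass
class Frame:
    """A single webcam image plus the name of the camera it came from."""
    camera: str
    image_bytes: bytes


# A describer turns an image and a prompt into text
# (in a real system, a call to a multimodal LLM).
Describer = Callable[[bytes, str], str]
# A speaker turns text into audio output
# (in a real system, a text-to-speech engine).
Speaker = Callable[[str], None]

PROMPT = (
    "Describe the wild animals in this webcam image for a blind listener. "
    "Also mention signs of fire or poaching, if any."
)


def describe_and_speak(frame: Frame, describe: Describer, speak: Speaker) -> str:
    """Run one frame through the pipeline and return the spoken text."""
    description = describe(frame.image_bytes, PROMPT)
    spoken_text = f"Camera {frame.camera}: {description}"
    speak(spoken_text)
    return spoken_text


# Stand-in for the multimodal LLM: always returns the same description.
def fake_describe(image_bytes: bytes, prompt: str) -> str:
    return "Two elephants are drinking at a waterhole."


# Stand-in for the text-to-speech engine: records the text instead of speaking it.
spoken: List[str] = []


def fake_speak(text: str) -> None:
    spoken.append(text)


result = describe_and_speak(Frame("Waterhole", b"\x00"), fake_describe, fake_speak)
print(result)
```

Passing the describer and the speaker in as plain callables keeps the pipeline independent of any particular model or speech engine, so either could be swapped without touching the rest of the code.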

Malicious Attacks Against Autonomous Vehicles

In their paper “Malicious Attacks against Multi-Sensor Fusion in Autonomous Driving”, the authors present the first study on the vulnerability of multi-sensor fusion systems using LiDAR, camera, and radar. “Specifically, we propose a novel attack method that can simultaneously attack all three types of sensing modalities using a single type of adversarial object. The adversarial object can be easily fabricated at low cost, and the proposed attack can be easily performed with high stealthiness and flexibility in practice. Extensive experiments based on a real-world AV testbed show that the proposed attack can continuously hide a target vehicle from the perception system of a victim AV using only two small adversarial objects.” (Abstract) In an article for ICTkommunikation on 6 March 2024, Oliver Bendel presented ten ways in which sensors can be attacked from the outside. On his behalf, M. Hashem Birahjakli investigated further possible attacks on self-driving cars as part of his final thesis in 2020. “The results of the work suggest that every 14-year-old girl could disable a self-driving car.” Boys too, of course, if they have comparable equipment with them (Image: Ideogram).

AAAI Spring Symposia Return to their Roots

The AAAI Spring Symposium Series will be held at Stanford University on March 25-27, 2024. The symposium co-chairs are Christopher Geib (SIFT, USA) and Ron Petrick (Heriot-Watt University, UK). Since 2019, the Association for the Advancement of Artificial Intelligence has not published the proceedings itself, but left this to the organizers of the individual symposia. This had unfortunate consequences. For example, some organizers did not publish the proceedings at all, and the scientists did not know this in advance. This, in turn, had consequences for the funding of travel and fees, since many universities will only pay for their members to attend conferences if they are linked to a publication. This major flaw, which damaged the prestigious conference, was fixed in 2024. As of this year, AAAI once again offers centralized publication, along with an excellent quality assurance process. Over the past ten years, the AAAI Spring Symposia have been relevant not only to classical AI, but also to roboethics and machine ethics. Groundbreaking symposia were, for example, “Ethical and Moral Considerations in Non-Human Agents” in 2016, “AI for Social Good” in 2017, and “AI and Society: Ethics, Safety and Trustworthiness in Intelligent Agents” in 2018. In 2024, you can look forward to the “Impact of GenAI on Social and Individual Well-being” symposium, which will focus on an app for the blind and GPTs as virtual learning companions (VLCs), among other things. More information is available at aaai.org/conference/spring-symposia/sss24/ (Image: DALL-E 3).

Tenderness in Digitality

The book “Tender Digitality”, edited by Charlotte Axelsson from the Zurich University of the Arts, will be published in early February 2024. From the publisher’s website: “Tender Digitality introduces an aesthetically oriented concept that intricately intertwines binary systems in a complex manner, setting them in motion. The concept responds to the human desire for sensuality, interpersonal connection, intuition, and well-being in digital environments. It explores ways to (re)transmit these experiences into the analog world of a book, developing its own distinct vocabularies. Readers are guided through the various perspectives of this kaleidoscope, encouraging them to delve into different play-forms of ‘tender digitality’ and develop their own approach. Assuming the role of researchers, they discover phenomena of extraordinary beauty or bizarreness within the spectrum spanning analogue and digital, social, and technological domains. Through exploration, learning, and the cultivation of ‘tender digitality,’ readers can envision their own version of a community where individuals and artificial intelligences, avatars and cyborgs, humans and computers navigate digital landscapes with agency, intuition, and sensitivity.” (Website Slanted) Contributors include Charlotte Axelsson, Oliver Bendel, Dana Blume, Marisa Burn, Alexander Damianisch, Léa Ermuth, Hannah Eßler, Barbara Getto, Leoni Hof, Marcial Koch, Mela Kocher, Friederike Lampert, Gunter Lösel, Francis Müller, Marie-France Rafael, Oliver Ruf, Sascha Schneider, and Grit Wolany. More information at www.slanted.de/product/tender-digitality/ (Photo: © ZHdK).

A “Weak” Robot

How do social robots emerge from simple, soft shapes? As part of their final thesis in 2021 at the School of Business FHNW, 23-year-old students Nhi Tran Hoang Yen and Thang Hoang Vu from Ho Chi Minh City (Vietnam) answered this question posed by their supervisor Prof. Dr. Oliver Bendel. They submitted eleven proposals for novel robots. The first is a pillow to which a tail has been added. Its name is Petanion, a portmanteau of “pet” and “companion”. The tail could move like the tail of a cat or dog. In addition, the pillow could make certain sounds. It would be optimal if the tail movements were based on the behavior of the user. Thus, as desired, a social robot is created from a simple, soft form, in this case a pet substitute. Petanion is soft and cute and lasts a long time. It can also be used if one has certain allergies or if there is not enough space or money in a household for a pet. Last but not least, its ecological footprint is probably smaller – above all, the robot does not eat animals that come from factory farming. The inspiration may have been Qoobo, a pillow with a tail, designed to calm and to “heal the heart”. Panasonic also believes in robots that emerge from simple, soft forms. It promotes its new robot NICOBO as a “yowai robotto”, a “weak” robot that has hardly any functions or capabilities. The round, cute robot has two separate displays as eyes and a tail that it constantly moves. According to the company, it is aimed primarily at singles and the elderly. There could well be a high demand for it, even beyond the target groups (Photo: Panasonic).

A Slime Mold in a Smartwatch

Jasmine Lu and Pedro Lopes of the University of Chicago published a paper in late 2022 describing the integration of an organism – the single-celled slime mold Physarum polycephalum – into a wearable. From the abstract: “Researchers have been exploring how incorporating care-based interactions can change the user’s attitude & relationship towards an interactive device. This is typically achieved through virtual care where users care for digital entities. In this paper, we explore this concept further by investigating how physical care for a living organism, embedded as a functional component of an interactive device, also changes user-device relationships. Living organisms differ as they require an environment conducive to life, which in our concept, the user is responsible for providing by caring for the organism (e.g., feeding it). We instantiated our concept by engineering a smartwatch that includes a slime mold that physically conducts power to a heart rate sensor inside the device, acting as a living wire. In this smartwatch, the availability of heart-rate sensing depends on the health of the slime mold – with the user’s care, the slime mold becomes conductive and enables the sensor; conversely, without care, the slime mold dries and disables the sensor (resuming care resuscitates the slime mold).” (Lu and Lopes 2022) The paper “Integrating Living Organisms in Devices to Implement Care-based Interactions” can be downloaded here.

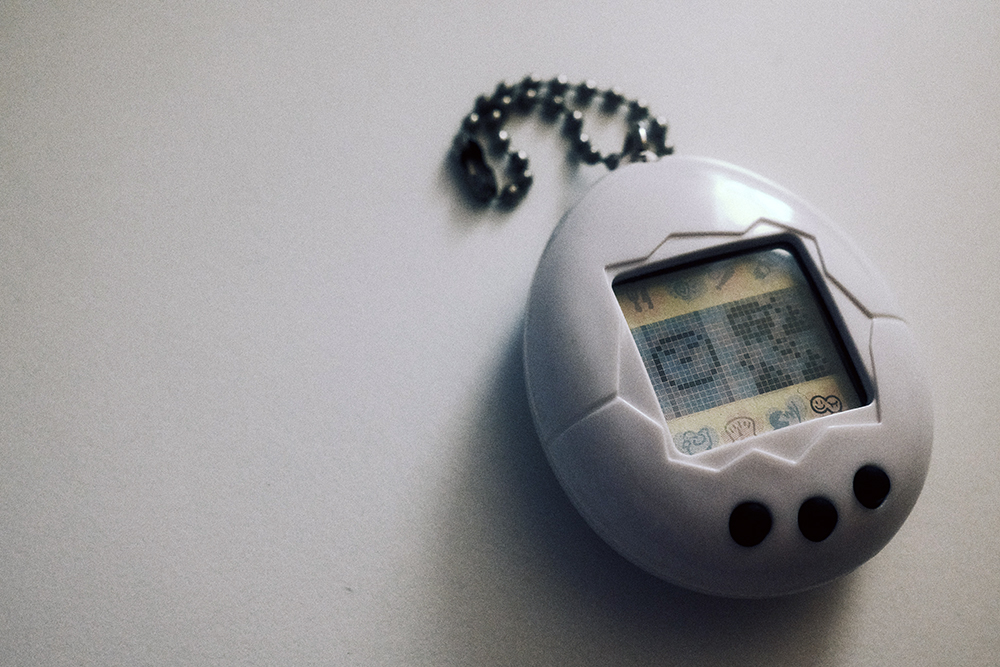

Proceedings of Robophilosophy 2022

In January 2023, the proceedings of Robophilosophy 2022 were published, under the title “Social Robots in Social Institutions”. “This book presents the Proceedings of Robophilosophy 2022, the 5th event in the biennial Robophilosophy conference series, held in Helsinki, Finland, from 16 to 19 August 2022. The theme of this edition of the conference was Social Robots in Social Institutions, and it featured international multidisciplinary research from the humanities, social sciences, Human-Robot Interaction, and social robotics. The 63 papers, 41 workshop papers and 5 posters included in this book are divided into 4 sections: plenaries, sessions, workshops and posters, with the 41 papers in the ‘Sessions’ section grouped into 13 subdivisions including elderly care, healthcare, law, education and art, as well as ethics and religion. These papers explore the anticipated conceptual and practical changes which will come about in the course of introducing social robotics into public and private institutions, such as public services, legal systems, social and healthcare services, or educational institutions.” (Website IOS Press) The proceedings contain the paper “Robots in Policing” by Oliver Bendel and the poster “Tamagotchi on our couch: Are social robots perceived as pets?” by Katharina Kühne, Melinda A. Jeglinski-Mende, and Oliver Bendel. More information via www.iospress.com/catalog/books/social-robots-in-social-institutions.

Metaverse Exhibition at Biennale Arte 2022

The 59th International Art Exhibition (Biennale Arte 2022) runs from 23 April to 27 November 2022. The main venues of the Biennale are Giardini and Arsenale. In the northern part of Arsenale, visitors will find many rooms with screens showing art projects around and from the Metaverse. The “1st Annual METAVERSE Art @VENICE”, an exhibition conceived by Victoria Lu, a famous art critic and curator of the Chinese artistic world, opened with gEnki, an interactive exhibition that exploits the potential of the Internet and virtual reality. “The exhibition offers a new model of ‘decentralized autonomous curatorship’, exploring various innovative and always different exhibition methods using all the main features of the ‘DAO’ (short for Distributed Autonomous Organization), therefore crowdfunding, co-construction, interconnection of platforms, high level of decentralization, all adapted to the curational concept.” (Flyer gEnki) The exhibition is very inspiring and shows what the Metaverse could become if it were not driven by commercial considerations alone. More information is available at annualmetaverseart.com.

Progress with the Starline Project

Google has given an update on its Starline project in a blog post dated October 11, 2022. With this system, two people can hold a video conference in which the respective conversation partner is displayed as a 3D projection – a quasi-hologram. A display based on light field technology is used for this purpose. Several high-resolution cameras film the participants in real time. The company describes this process in a very non-technical or poetic way: “The technology works like a magic window, where users can talk, gesture and make eye contact with another person, life-size and in three dimensions. It is made possible through major research advances across machine learning, computer vision, spatial audio and light field display systems.” (Company News, 11 October 2022) The company hopes to improve hybrid working with Starline. Indeed, quasi-holograms would be interesting not only for concerts, but also for companies that allow and encourage homeworking or that are highly networked and distributed.

Tamagotchi on Our Couch

On the first day (August 16, 2022) of the Robophilosophy conference, Katharina Kühne (University of Potsdam) presented a poster on a project she had carried out together with Melinda A. Jeglinski-Mende from the same university. Oliver Bendel (School of Business FHNW) was also marginally involved. The poster is titled “Tamagotchi on our couch: Are social robots perceived as pets?”. The abstract states: “Although social robots increasingly enter our lives, it is not clear how they are perceived. Previous research indicates that there is a tendency to anthropomorphize social robots, at least in the Western culture. One of the most promising roles of robots in our society is companionship. Pets also fulfill this role, which gives their owners health and wellbeing benefits. In our study, we investigated if social robots can implicitly and explicitly be perceived as pets. In an online experiment, we measured implicit associations between pets and robots using pictures of robots and devices, as well as attributes denoting pet and non-pet features, in a Go/No-Go Association Task (GNAT). Further, we asked our participants to explicitly evaluate to what extent they perceive robots as pets and if robots could replace a real pet. Our findings show that implicitly, but not explicitly, social robots are perceived as pets.” (Abstract) The poster is available here.