The “Proceedings of the International Conference on Animal-Computer Interaction 2024” were published at the end of November 2024, a few days before the conference in Glasgow. The following papers received awards: “Wireless Tension Sensors for Characterizing Dog Frailty in Veterinary Settings” by Colt Nichols (North Carolina State University), Yifan Wu (North Carolina State University), Alper Bozkurt, David Roberts (North Carolina State University) and Margaret Gruen (North Carolina State University): Best Paper Award; “Communication Functions in Speech Board Use by a Goffin’s Cockatoo: Implications for Research and Design” by Jennifer Cunha (Indiana University), Corinne Renguette (Purdue University), Lily Stella (Indiana University) and Clara Mancini (The Open University): Honourable Mention Award; “Surveying The Extent of Demographic Reporting of Animal Participants in ACI Research” by Lena Ashooh (Harvard University), Ilyena Hirskyj-Douglas (University of Glasgow) and Rebecca Kleinberger (Northeastern University): Honourable Mention Award; “Shelling Out the Fun: Quantifying Otter Interactions with Instrumented Enrichment Objects” by Charles Ramey (Georgia Institute of Technology), Jason Jones (Georgia Aquarium), Kristen Hannigan (Georgia Aquarium), Elizabeth Sadtler (Georgia Aquarium), Jennifer Odell (Georgia Aquarium), Thad Starner (Georgia Institute of Technology) and Melody Jackson (Georgia Institute of Technology): Best Short Paper Award; “The Animal Whisperer Project” by Oliver Bendel (FHNW School of Business) and Nick Zbinden (FHNW School of Business): Honourable Mention Short Paper Award.

Human Stuff from AI Characters

“Left to their own devices, an army of AI characters didn’t just survive – they thrived. They developed in-game jobs, shared memes, voted on tax reforms and even spread a religion.” (MIT Technology Review, 27 November 2024) This was reported by MIT Technology Review in an article on November 27. “The experiment played out on the open-world gaming platform Minecraft, where up to 1000 software agents at a time used large language models (LLMs) to interact with one another. Given just a nudge through text prompting, they developed a remarkable range of personality traits, preferences and specialist roles, with no further inputs from their human creators.” (MIT Technology Review, 27 November 2024) According to the magazine, the work by AI startup Altera is part of a broader field that seeks to use simulated agents to model how human groups would react to new economic policies or other interventions. The article entitled “These AI Minecraft characters did weirdly human stuff all on their own” can be accessed here.

Grandma Daisy Tricks Phone Scammers

“Human-like AIs have brought plenty of justifiable concerns about their ability to replace human workers, but a company is turning the tech against one of humanity’s biggest scourges: phone scammers. The AI imitates the criminals’ most popular target, a senior citizen, who keeps the fraudsters on the phone as long as possible in conversations that go nowhere, à la Grandpa Simpson.” (Techspot, 14 November 2024) This was reported by Techspot in an article from November 14, 2024. In this case, an AI grandmother is sicced on the fraudsters. “The creation of O2, the UK’s largest mobile network operator, Daisy, or dAIsy, is an AI created to trick scammers into thinking they are talking to a real grandmother who likes to ramble. If and when the AI does hand over the demanded bank details, it reads out fake numbers and names.” (Techspot, 14 November 2024) Daisy works by listening to a caller and transcribing his or her voice to text. Responses are generated by a large language model (LLM), complete with a character personality layer, and then fed back through a custom AI text-to-speech model to generate a voice response. All this happens in real time, as the magazine reports. As phone scammers use more and more AI in their calls, we will soon find AI systems trying to outsmart each other.
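
The three stages described in the article (speech to text, LLM with a personality layer, text to speech) can be pictured as a simple loop over conversation turns. The following Python sketch is only an illustration under stated assumptions: the names `Persona`, `transcribe`, `generate_reply`, `synthesize`, and `handle_turn` are hypothetical, each stage is a stand-in stub rather than a real model, and nothing here reflects how O2 actually built Daisy.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    """Hypothetical personality layer: a name plus a speaking style."""
    name: str
    style: str


def transcribe(audio: bytes) -> str:
    # Stub for speech-to-text. In this sketch the "audio" is just UTF-8 text.
    return audio.decode("utf-8")


def generate_reply(history: list[str], persona: Persona) -> str:
    # Stub for the LLM call. A real system would send the history and the
    # persona description to a language model; here we return a canned ramble.
    last_heard = history[-1]
    return (
        f"Oh dear, did you say '{last_heard}'? "
        f"This is {persona.name}, and that reminds me of a long story..."
    )


def synthesize(text: str) -> bytes:
    # Stub for text-to-speech. A real system would return audio samples.
    return text.encode("utf-8")


def handle_turn(audio: bytes, history: list[str], persona: Persona) -> bytes:
    """Process one caller utterance and return the spoken reply."""
    heard = transcribe(audio)                 # 1. voice to text
    history.append(heard)
    reply = generate_reply(history, persona)  # 2. text to persona-styled reply
    history.append(reply)
    return synthesize(reply)                  # 3. reply to voice


if __name__ == "__main__":
    daisy = Persona(name="Daisy", style="rambling grandmother")
    conversation: list[str] = []
    spoken = handle_turn(b"Please give me your bank details", conversation, daisy)
    print(spoken.decode("utf-8"))
```

Keeping the conversation history in one list is a design choice of this sketch; it lets the reply stage see everything said so far, which is what allows a character to keep a caller talking.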

The kAIxo Project

The interim presentation of the kAIxo project took place on 11 November 2024. Nicolas Lluis Araya is the project collaborator. Chatbots for dead, endangered, and extinct languages are being developed at the FHNW School of Business. One well-known example is @llegra, a chatbot for Vallader. Oliver Bendel recently tested the reach of GPTs for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material available for them. On 12 May 2024 – after Irish Girl and Maori Girl – a first version of Adelina, a chatbot for Basque, was created. It was later improved in a second version. As part of the “kAIxo” project (the Basque “kaixo” corresponds to the English “hello”), the chatbot or voice assistant kAIxo is being built to speak Basque. Its purpose is to keep users practising written or spoken language or to develop the desire to learn the endangered language. The chatbot is based on GPT-4o. Retrieval-Augmented Generation (RAG) plays a central role. A ChatSubs dataset is used, which contains dialogues in Spanish and three other official Spanish languages (Catalan, Basque, and Galician). Nicolas Lluis Araya presented a functioning prototype at the interim presentation. This is now to be expanded step by step.
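
To give an idea of what retrieval-augmented generation means in practice, here is a minimal Python sketch. It is not the kAIxo implementation: the tiny corpus, the word-overlap scoring, and the functions `tokens`, `retrieve`, and `build_prompt` are assumptions made purely for illustration, and the entries are hand-written examples, not taken from the ChatSubs dataset. A real system would typically use embeddings for retrieval and pass the resulting prompt to the language model.

```python
import re

# Hand-written illustrative snippets (NOT from the ChatSubs dataset).
CORPUS = [
    "Kaixo means hello in Basque.",
    "Eskerrik asko means thank you in Basque.",
    "Egun on means good morning in Basque.",
    "Agur means goodbye in Basque.",
]


def tokens(text: str) -> set[str]:
    """Lowercase a text and split it into a set of words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k snippets sharing the most words with the query."""
    query_words = tokens(query)
    ranked = sorted(
        corpus,
        key=lambda snippet: len(query_words & tokens(snippet)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "How do I say thank you?"
    best = retrieve(question, CORPUS, k=1)
    print(build_prompt(question, best))
```

The point of the pattern is that the model answers with the retrieved passages in front of it, which matters for a language with comparatively little training material.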

A New School of Computer Science

All four cantons supporting the FHNW approved the global budget of CHF 204.7 million in September and October 2024. This also clears the way for the new Hochschule für Informatik FHNW (presumed English name: FHNW School of Computer Science) with a main location in Brugg-Windisch and a secondary location north of the Jura (Basel-Landschaft and Basel-Stadt). It is due to be founded at the beginning of 2025 and will begin teaching in fall 2025. From this date, it will take over the existing computer science courses at the FHNW School of Engineering. During the 2025 – 2028 performance mandate period, the plan is to establish further education and training courses and develop research and development activities. The aim of the new university is to educate and train the IT specialists required by business and administration in Northwestern Switzerland. The degree programs and courses offered by the FHNW School of Business in business information technology, artificial intelligence, and robotics are not affected by this change. The FHNW School of Computer Science will be the tenth university under the umbrella of the University of Applied Sciences and Arts Northwestern Switzerland (Photo: Pati Grabowicz).

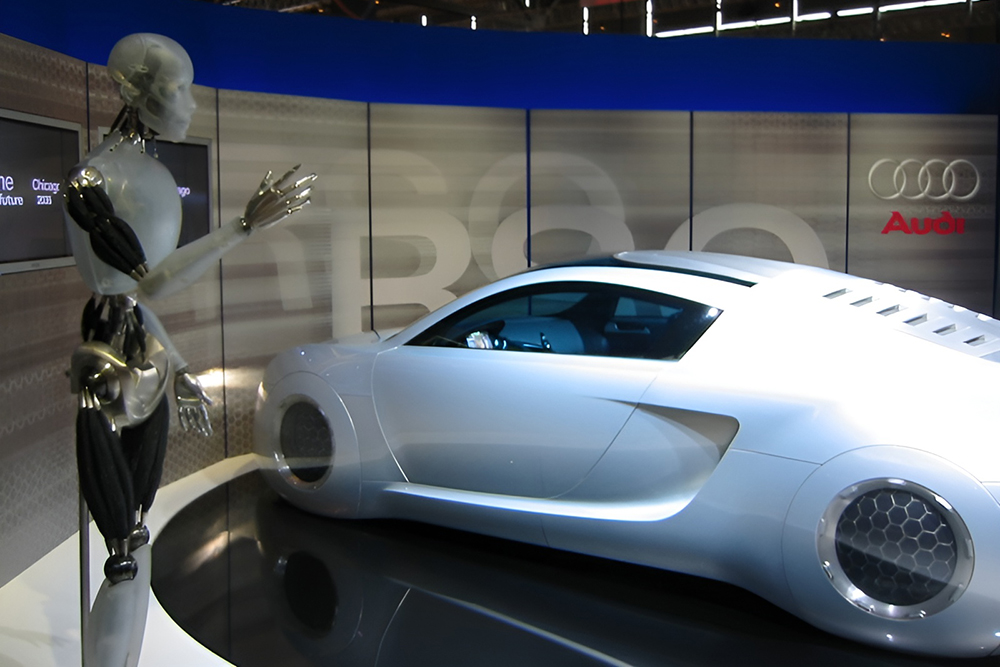

Back to the Future

Elon Musk presented the prototypes of his new Cybercab and his new Robovan in October 2024. In this context, he once again said: “The future should look like the future.” (TechCrunch, 10 October 2024) This is an astonishing statement, because if you know a little about the history of robot and vehicle construction, you know that Elon Musk is drawing on ideas that were popular 20 to 80 years ago. The brass, copper, silver, and gold tones and the large, matt or polished surfaces are reminiscent of Elektro (1939) and his animal companion Sparko (1940) as well as futuristic vehicles such as Gil Spear’s Chrysler two-seater (1941). Science fiction and fantasy are also likely to play a role in the design of Tesla and co. – think of steampunk and cyberpunk in general, and think of movies like “Metropolis” (1927) and “I, Robot” (2004). Elon Musk generally likes to mix ideas from fiction into his developments, for example the large language model called Grok, which takes its name from “Stranger in a Strange Land” and is intended to fulfil claims formulated in “The Hitchhiker’s Guide to the Galaxy”. TechCrunch also points out the backward-looking nature of the Robovan: “The Robovan has a retro-futuristic look – somewhere between a bus from The Jetsons and a toaster from the 1950s. It features silver metallic sides with black details, and strips of light running parallel to the ground along its sides, with doors that slide out from the middle.” (TechCrunch, 10 October 2024) Robots and robotic vehicles could look very different in the 2020s (Photo: Eirik Newth; cropped by Robophilosophy).

The Animal Whisperer at ACI 2024

The paper “The Animal Whisperer Project” by Oliver Bendel and Nick Zbinden will be presented at ACI 2024, which takes place in Glasgow this December. It is a conference that brings together the small community of experts in animal-computer interaction and animal-machine interaction. This includes Oliver Bendel, who has been researching in this field since 2012, with a background in animal ethics from the 1980s and 1990s. He initiated the Animal Whisperer project. The developer was Nick Zbinden, who graduated from the FHNW School of Business. From March 2024, three apps were created on the basis of GPT-4: the Cow Whisperer, the Horse Whisperer, and the Dog Whisperer. They can be used to analyze the body language, behavior, and environment of cows, horses, and dogs. The aim is to avert danger to humans and animals. For example, a hiker can receive a recommendation on his or her smartphone not to cross a pasture if a mother cow and her calves are present. All he or she has to do is call up the application and take photos of the surroundings. The three apps have been available as prototypes since August 2024. With the help of prompt engineering and retrieval-augmented generation (RAG), they have been given extensive knowledge and skills. Above all, self-created and labeled photos were used. In the majority of cases, the apps correctly describe the animals’ body language and behavior. Their recommendations for human behavior are also adequate (Image: DALL-E 3).

Can and Should We Use Robots in Prisons?

Tamara Siegmann and Prof. Dr. Oliver Bendel carried out the “Robots in Prison” project in June and July 2024. The student, who is studying business administration at the FHNW School of Business, came up with the idea after taking an elective module on social robots with Oliver Bendel. In his paper “Love Dolls and Sex Robots in Unproven and Unexplored Fields of Application”, the philosopher of technology had already made a connection between robots and prisons, but had not systematically investigated it. The two did so together, with the help of expert interviews with the intercantonal commissioner for digitalization, several prison directors and employees as well as inmates. The result was the paper “Social and Collaborative Robots in Prison”, which was submitted to ICSR 2024. The International Conference on Social Robotics is the most important conference for social robotics alongside Robophilosophy. The paper was accepted in September 2024 after a revision of the methods section, which was made more transparent and extensive and linked to a directory on GitHub. This year’s conference will take place in Odense (Denmark) from October 23 to 26. Last year it was held in Doha (Qatar) and the year before last in Florence (Italy).

AI Ethics at the FHNW

Prof. Dr. Oliver Bendel has been teaching information ethics, AI ethics, robot ethics, and machine ethics at the FHNW for around 15 years. He is responsible for the “Ethik und Technologiefolgenabschätzung” (“Ethics and Technology Assessment”) module in the new Business AI degree program at the FHNW School of Business in Olten. Here, the focus is on AI ethics, but students will also learn about robot ethics and machine ethics approaches – including annotated decision trees and moral prompt engineering. And they will use information ethics, including data ethics, to analyze and evaluate the origins and flows of data and information and engage in bias discussions. Last but not least, they will delve into technology assessment. Oliver Bendel also teaches the “Ethik und Recht” (“Ethics and Law”) module in the Business Information Systems degree program at the FHNW School of Business in Olten (which he took over in 2010 as “Informatik, Ethik und Gesellschaft”, later renamed “Informationsethik”), the “Recht und Ethik” (“Law and Ethics”) module in the Geomatics degree program at the FHNW School of Architecture, Construction and Geomatics in Muttenz, and “Ethisches Reflektieren” (“Ethical Reflecting”) and “Ethisches Implementieren” (“Ethical Implementing”) in the Data Science degree program at the FHNW School of Engineering in Brugg-Windisch. His elective modules on social robotics are very popular (Photo: Pati Grabowicz).

Start of the kAIxo Project

Chatbots for dead, endangered, and extinct languages are being developed at the FHNW School of Business. One well-known example is @llegra, a chatbot for Vallader. Oliver Bendel recently tested the reach of GPTs for endangered languages such as Irish (Irish Gaelic), Maori, and Basque. According to ChatGPT, there is a relatively large amount of training material for them. On May 12, 2024 – after Irish Girl and Maori Girl – a first version of Adelina, a chatbot for Basque, was created. It was later improved in a second version. As part of the kAIxo project (the Basque “kaixo” corresponds to the English “hello”), a chatbot or voice assistant named kAIxo that speaks Basque is to be developed. The purpose is to keep users practicing written or spoken language or to develop the desire to learn the endangered language. The chatbot should be based on a large language model (LLM). Both prompt engineering and fine-tuning are conceivable for customization. Retrieval-augmented generation (RAG) can play a central role. The result will be a functioning prototype. Nicolas Lluis Araya, a student of business informatics, has been recruited to implement the project. The kick-off meeting will take place on September 3, 2024.