The 18th International Conference on Social Robotics (ICSR+ART 2026) will take place in London, UK, from 1 to 4 July 2026. ICSR is the leading international forum that brings together researchers, academics, and industry professionals from across disciplines to advance the field of social robotics. As part of the conference program, Robot Fringe 2026 offers a dedicated platform for experimental, daring, and imaginative ideas, presented on a performance stage within the supportive and inclusive environment of ICSR+ART 2026 (icsr2026.uk/robot-fringe/). Drawing inspiration from the Edinburgh Festival Fringe – the world’s largest performing arts festival and the origin of a global tradition of fringe and off-festivals celebrating unconventional and small-scale performances (www.edfringe.com) – Robot Fringe embraces creative risk-taking and non-traditional formats across artistic and technological practices. The variety show will take place on the evening of Thursday 2 July at Senate House in London and will run in conjunction with ICSR+ART 2026. The program is curated and hosted by researcher-comedians Heather Knight and Piotr Mirowski. Further information and submission details are available via the ICSR submission page (icsr2026.uk/submission/) and the Robot Fringe website (www.robotfringe.com).

The First Lunar Hotel

On its website, the U.S.-based company GRU Space describes a project called GRU (Galactic Resource Utilization), which aims to establish a series of permanent structures on the Moon. The planned centerpiece of the initiative is a hotel, the “First Lunar Hotel”. The project does not present itself as conventional space tourism – likely also to avoid the criticism associated with it – but rather as an early phase of a long-term human presence beyond Earth. GRU targets a small group of participants who are willing to become involved in the construction process at an early stage. The process begins with an application that requires a non-refundable fee. Those accepted into the program must pay a substantial deposit, which will later be credited toward the total cost. Final pricing has not yet been set but is expected to exceed 10 million U.S. dollars. Medical, personal, and financial screenings are also planned. Applications are to be reviewed starting in 2026, with an initial lunar mission for technical preparation scheduled for 2029. From 2031 onward, habitats such as the Lunar Cave Base are to be installed and training activities are to begin. The first hotel on the Moon is then expected to open – according to the timeline on the website, as early as 2032. In the illustrations, the structure oscillates between ancient temples, Palladian villas, and Swiss grand hotels as imagined by American politicians. Whether the project will materialize remains uncertain, not only because of the high upfront payment required, but also because of the technical challenges and environmental implications. Further information is available at www.gru.space/reserve (Image: Lunar Cave Base, based on an illustration by GRU Space, generated with GPT Image).

Quo Vadis, CYBATHLON?

Ten years ago, the CYBATHLON was held for the first time. It marked the beginning of a fascinating and inspiring project centered on inclusive AI and inclusive robotics. The competition – bringing together people with disabilities and impairments to compete with and against one another – was founded by Prof. Dr. Robert Riener of ETH Zurich. At the 2016 CYBATHLON, SRF host Tobias Müller spoke five times with Prof. Dr. Oliver Bendel, a technology philosopher from Zurich, who provided context and assessments regarding the use of implants, prosthetics, and robots. On one occasion, Prof. Dr. Lino Guzzella, then President of ETH Zurich, also took part; on another, Robert Riener joined the discussion. The most recent edition of the CYBATHLON took place in 2024. A total of 67 teams from 24 nations competed in eight disciplines at the SWISS Arena in Kloten near Zurich and at seven interconnected hubs in the United States, Canada, South Africa, Hungary, Thailand, and South Korea. The website currently states: “While CYBATHLON’s journey at ETH Zürich ends here, the story is far from over. The next edition of the event may take place in Asia in 2028, marking an exciting new chapter for this unique global competition.” (CYBATHLON website) This would allow the success story to continue – this time not in Europe, but in Asia (Photo: ETH Zürich, CYBATHLON/Alessandro della Bella).

Inclusive AI and Inclusive Robotics are Highly Valued

At CES 2026, some of the most compelling examples of Inclusive AI and Inclusive Robotics came not from consumer gadgets, but from European assistive technologies designed to expand human autonomy. This was reported by FAZ and other media outlets in January 2026. These innovations show how AI-driven perception and robotics can be centered on accessibility – and still scale beyond niche use cases. Romanian startup Dotlumen exemplifies Inclusive AI through its “.lumen Glasses for the Blind,” a wearable system intended to take over the role of a guide dog with real-time, on-device intelligence. Using multiple cameras, sensors, and GPU-based computer vision, the glasses interpret sidewalks, obstacles, and spatial structures and translate them into intuitive haptic signals. The company calls this approach “Pedestrian Autonomous Driving” – a concept that directly bridges human navigation and mobile robotics. Notably, the same algorithms are now being adapted for autonomous delivery robots, underscoring the overlap between assistive AI and broader robotic autonomy. A complementary approach comes from France-based Artha (Seehaptic), whose haptic belt uses AI-powered scene understanding to convert visual space into tactile feedback. By shifting navigation cues from sound to touch, the system reduces cognitive load and leverages sensory substitution – an inclusive design principle with implications for human-machine interfaces in robotics. Together, these technologies illustrate a European model of Inclusive AI: privacy-preserving, embodied, and focused on real-world autonomy. What begins as assistive technology increasingly becomes a foundation for the next generation of intelligent, human-centered robotics (Photo: ETH Zürich, CYBATHLON/Alessandro della Bella).

SETI Instead of METI

As part of the ToBIT event series at the FHNW School of Business, four students of Prof. Dr. Oliver Bendel explored four topics related to his field of research during the Fall 2025/2026 semester: “The decoding of symbolic languages of animals”, “The decoding of animal body language”, “The decoding of animal facial expressions and behavior”, and “The decoding of extraterrestrial languages”. The students presented their papers on January 9, 2026. In some cases, they not only reviewed the state of research but also developed an independent position. The paper “The decoding of extraterrestrial languages” by Ilija Bralic argues that Messaging Extraterrestrial Intelligence (METI), in contrast to passive SETI (Search for Extraterrestrial Intelligence), creates a dangerous imbalance between humanity’s rapidly expanding technical capacity to send interstellar messages and its limited ethical, scientific, and political ability to govern this power responsibly. The central thesis is that the moral justifications for METI are speculative and anthropocentric, relying largely on optimistic assumptions about extraterrestrial behavior, while the potential risks are severe, logically grounded, and potentially existential. These risks include fundamental misinterpretation caused by the “human lens,” strategic dangers described by the Dark Forest hypothesis, historical patterns of harm in technologically asymmetric encounters, and profound cultural, psychological, and political disruption. The paper concludes that unilateral METI decisions by individuals or private groups are ethically indefensible and that, under the Precautionary Principle, humanity should immediately halt active transmissions. As a solution, it proposes a binding international governance framework, including a temporary global moratorium, the creation of a dedicated international authority, a strict multi-stage decision-making protocol, and robust transparency and monitoring mechanisms. This approach frames responsible restraint – not transmission – as humanity’s first genuine test of cosmic maturity.

AI and Human Creativity

As part of the AAAI Spring Symposia, the symposium “Will AI Light Up Human Creativity or Replace It?: Toward Well-Being AI for co-evolving human and machine intelligence” focuses on how advances in generative AI, large language models, and multi-agent systems are transforming human creativity and decision-making. It addresses the central question of whether AI will amplify human potential or increasingly take its place. The symposium advances the idea of Well-Being AI, emphasizing human-AI collaboration and co-evolution rather than AI as an isolated or autonomous system. While highlighting the potential of AI to support creativity, discovery, and personal development, it also examines risks such as overreliance, reduced diversity of thought, and loss of human autonomy. Chaired by Takashi Kido (see photo) of Teikyo University and Keiki Takadama of The University of Tokyo, the symposium brings together researchers and practitioners from technical, philosophical, and social disciplines to discuss principles and frameworks for AI that augments rather than replaces human creativity. Further information on this symposium and the broader AAAI Spring Symposia organized by the Association for the Advancement of Artificial Intelligence can be found at https://aaai.org/conference/spring-symposia/sss26/ and webpark2506.sakura.ne.jp/aaai/sss26-will-ai-light-up-human-creativity-or-replace-it/.

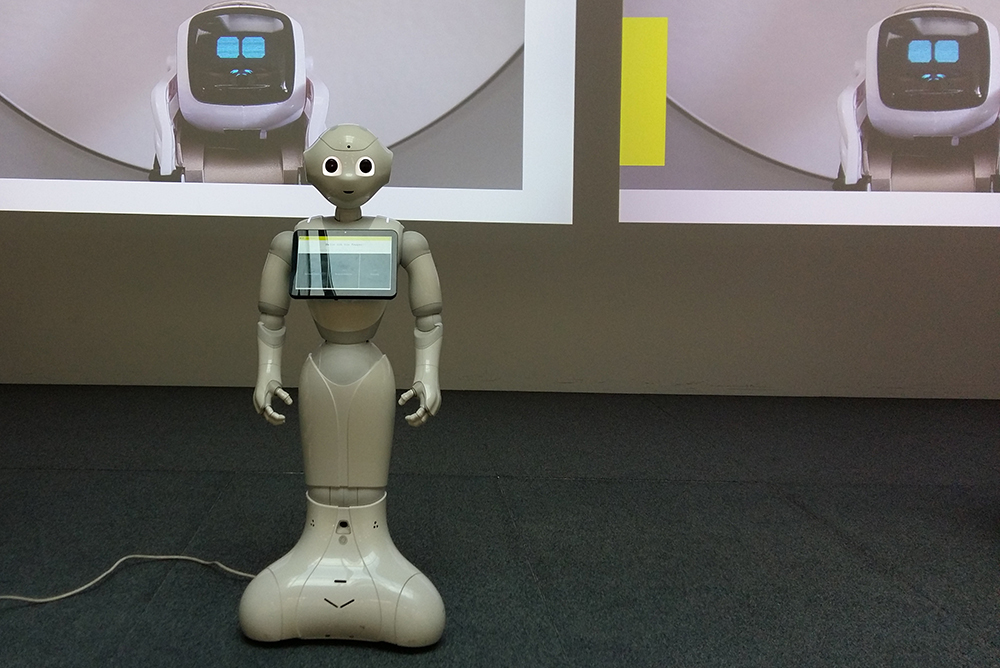

Talking to a Robot

The article “Small Talk with a Robot Reduces Stress and Improves Mood” by Katharina Kühne, Antonia L. Z. Klöffel, Oliver Bendel, and Martin H. Fischer was published on December 23, 2025. It is part of the volume “Social Robotics + AI: 17th International Conference, ICSR+AI 2025, Naples, Italy, September 10–12, 2025, Proceedings, Part III.” From the abstract: “Research has demonstrated that social support is crucial in mitigating stress and enhancing mood. Not only do long-term, meaningful relationships contribute to well-being, but everyday social interactions, such as small talk, also offer psychological benefits. As social robots increasingly become more integrated into daily life, they present a potential avenue for stress interventions. In our online study, 98 participants underwent a stress induction using the Stroop task and were then assigned to one of three conditions: engaging in scripted small talk with a simulated NAO robot online, listening to a neutral story told by the same NAO robot, or no intervention (control condition). Results indicate that both interventions effectively reduced stress, with a tendency towards a stronger effect in the Small talk condition. Small talk not only helped maintain positive affect but also reduced negative affect. Notably, the benefits were more pronounced among individuals experiencing higher acute stress following the stress induction, but were less evident in those with chronically elevated stress levels. Furthermore, the effect of the intervention on stress reduction was mediated by changes in positive affect. These findings suggest that small talk with a social robot may serve as a promising tool for stress reduction and affect regulation.” The first author, a researcher from the University of Potsdam, presented the paper on September 12, 2025, in Naples. It can be downloaded from link.springer.com/chapter/10.1007/978-981-95-2398-6_1.

Robots in Space

The article “Wearable Social Robots in Space” by Tamara Siegmann and Oliver Bendel was published on December 23, 2025. It is part of the volume “Social Robotics + AI: 17th International Conference, ICSR+AI 2025, Naples, Italy, September 10–12, 2025, Proceedings, Part I.” From the abstract: “Social robots have been developed on Earth since the 1990s. This article shows that they can also provide added value in space – particularly on a manned flight to Mars. The focus in this paper is on wearable social robots, which seem to be an obvious type due to their small size and low weight. First, the environment and situation of the astronauts are described. Then, using AIBI as an example, it is shown how it fits into these conditions and requirements and what tasks it can perform. Possible further developments and improvements of a wearable social robot are also mentioned in this context. It becomes clear that a model like AIBI is well suited to accompany astronauts on a Mars flight. However, further developments and improvements in interaction and communication are desirable before application.” The Swiss student presented the paper together with her professor on September 10, 2025, in Naples. It can be downloaded from link.springer.com/chapter/10.1007/978-981-95-2379-5_33.

Towards Inclusive AI and Inclusive Robotics

The article “Wearable Social Robots for the Disabled and Impaired” by Oliver Bendel was published on December 23, 2025. It is part of the volume “Social Robotics + AI: 17th International Conference, ICSR+AI 2025, Naples, Italy, September 10–12, 2025, Proceedings, Part III.” From the abstract: “Wearable social robots can be found on a chain around the neck, on clothing, or in a shirt or jacket pocket. Due to their constant availability and responsiveness, they can support the disabled and impaired in a variety of ways and improve their lives. This article first identifies and summarizes robotic and artificial intelligence functions of wearable social robots. It then derives and categorizes areas of application. Following this, the opportunities and risks, such as those relating to privacy and intimacy, are highlighted. Overall, it emerges that wearable social robots can be useful for this group, for example, by providing care and information anywhere and at any time. However, significant improvements are still needed to overcome existing shortcomings.” The technology philosopher presented the paper on September 12, 2025, in Naples. It can be downloaded from link.springer.com/chapter/10.1007/978-981-95-2398-6_8.

The Rise of General-purpose Robots

The paper “The Universal Robot of the 21st Century” by Oliver Bendel was published in February 2025 in the proceedings volume “Social Robots with AI: Prospects, Risks, and Responsible Methods” … From the abstract: “Developments in several areas of computer science, robotics, and social robotics make it seem likely that a universal robot will be available in the foreseeable future. Large language models for communication, perception, and control play a central role in this. This article briefly outlines the developments in the various areas and uses them to create the overall image of the universal robot. It then discusses the associated challenges from an ethical and social science perspective. It can be said that the universal robot will bring with it new possibilities and will perhaps be one of the most powerful human tools in physical space. At the same time, numerous problems are foreseeable, individual, social, and ecological.” The proceedings volume comprises the papers presented at Robophilosophy 2024 in Aarhus, where leading philosophers, computer scientists, and roboticists met in August 2024. Like ICSR, Robophilosophy is one of the world’s leading conferences on social robotics. General-purpose robots, the predecessors of universal robots, have now become widespread, as exemplified by Digit, Apollo, and Figure 03. Given the topic’s growing relevance, the author accepted manuscript of this article is being made freely available on this site for non-commercial use only and with no derivatives, in line with the publisher’s self-archiving policy.